Upload folder using huggingface_hub

- .gitattributes +0 -6

- README.md +230 -0

- chat_template.json +3 -0

- config.json +81 -0

- configuration.json +1 -0

- generation_config.json +14 -0

- merges.txt +0 -0

- model-00001-of-00006.safetensors +3 -0

- model-00002-of-00006.safetensors +3 -0

- model-00003-of-00006.safetensors +3 -0

- model-00004-of-00006.safetensors +3 -0

- model-00005-of-00006.safetensors +3 -0

- model-00006-of-00006.safetensors +3 -0

- model.safetensors.index.json +0 -0

- preprocessor_config.json +21 -0

- tokenizer.json +0 -0

- tokenizer_config.json +239 -0

- video_preprocessor_config.json +21 -0

- vocab.json +0 -0

.gitattributes

CHANGED

|

@@ -33,9 +33,3 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

-

blobs/14c74063c6f61e207573c6521ca77456db1bcb9c4641fbc1ebfc3bd7a3ed2980 filter=lfs diff=lfs merge=lfs -text

|

| 37 |

-

blobs/3d1f9b536862314823d0ebae38ff4fad2e2fbd8d5c0c7747f7d3272a624699d2 filter=lfs diff=lfs merge=lfs -text

|

| 38 |

-

blobs/797ecde7495593d55121235a6bb2cc484a0eaf110b6ba74b30797700898d7e27 filter=lfs diff=lfs merge=lfs -text

|

| 39 |

-

blobs/ad04cbd1635a7af08a79f08a39792a317caf494100dda7a09a7d219ba2f91af4 filter=lfs diff=lfs merge=lfs -text

|

| 40 |

-

blobs/e4299ea234b36965055a2a31282fc29ea3f37928970e421844d779cb17b0fa57 filter=lfs diff=lfs merge=lfs -text

|

| 41 |

-

blobs/f1b85a58d250a7684d910ebe5804b54baf3c779d36620ff6da40c0a56fea57b6 filter=lfs diff=lfs merge=lfs -text

|

|

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|


README.md

ADDED

|

@@ -0,0 +1,230 @@

|


| 1 |

+

---

|

| 2 |

+

library_name: transformers

|

| 3 |

+

license: apache-2.0

|

| 4 |

+

pipeline_tag: text-generation

|

| 5 |

+

tags:

|

| 6 |

+

- AWQ

|

| 7 |

+

- vLLM

|

| 8 |

+

base_model:

|

| 9 |

+

- Qwen/Qwen3-VL-30B-A3B-Instruct

|

| 10 |

+

base_model_relation: quantized

|

| 11 |

+

---

|

| 12 |

+

# Qwen3-VL-30B-A3B-Instruct-AWQ

|

| 13 |

+

Base Model: [Qwen/Qwen3-VL-30B-A3B-Instruct](https://www.modelscope.cn/models/Qwen/Qwen3-VL-30B-A3B-Instruct)

|

| 14 |

+

|

| 15 |

+

### 【Dependencies / Installation】

|

| 16 |

+

As of **2025-10-08**, create a fresh Python environment and run:

|

| 17 |

+

```bash

|

| 18 |

+

uv venv

|

| 19 |

+

source .venv/bin/activate

|

| 20 |

+

|

| 21 |

+

# Install vLLM >=0.11.0

|

| 22 |

+

uv pip install -U vllm

|

| 23 |

+

|

| 24 |

+

# Install Qwen-VL utility library (recommended for offline inference)

|

| 25 |

+

uv pip install qwen-vl-utils==0.0.14

|

| 26 |

+

```
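The install comment above asks for vLLM 0.11.0 or newer. A minimal sketch of how that requirement could be checked from Python follows; the helper names (`version_tuple`, `meets_minimum`, `installed_vllm_ok`) are hypothetical and not part of vLLM, and the parser only looks at the leading numeric release, ignoring suffixes such as `rc1`.

```python
import re
from importlib import metadata


def version_tuple(text):
    """Parse the leading numeric release, e.g. '0.11.0rc1' -> (0, 11, 0)."""
    match = re.match(r"\d+(?:\.\d+)*", text)
    if match is None:
        return ()
    return tuple(int(part) for part in match.group(0).split("."))


def meets_minimum(installed, minimum="0.11.0"):
    """True when the installed version is at least the minimum version."""
    return version_tuple(installed) >= version_tuple(minimum)


def installed_vllm_ok():
    """Check the vLLM version in the current environment, if it is installed."""
    try:
        return meets_minimum(metadata.version("vllm"))
    except metadata.PackageNotFoundError:
        return False
```

Calling `installed_vllm_ok()` inside the activated `.venv` returns `False` both when vLLM is missing and when it is older than 0.11.0.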

|

| 27 |

+

|

| 28 |

+

For more details, refer to the [vLLM Official Qwen3-VL Guide](https://docs.vllm.ai/projects/recipes/en/latest/Qwen/Qwen3-VL.html).

|

| 29 |

+

|

| 30 |

+

### 【vLLM Startup Command】

|

| 31 |

+

|

| 32 |

+

```bash

|

| 33 |

+

CONTEXT_LENGTH=32768

|

| 34 |

+

|

| 35 |

+

vllm serve \

|

| 36 |

+

tclf90/Qwen3-VL-30B-A3B-Instruct-AWQ \

|

| 37 |

+

--served-model-name My_Model \

|

| 38 |

+

--swap-space 4 \

|

| 39 |

+

--max-num-seqs 8 \

|

| 40 |

+

--max-model-len $CONTEXT_LENGTH \

|

| 41 |

+

--gpu-memory-utilization 0.9 \

|

| 42 |

+

--tensor-parallel-size 2 \

|

| 43 |

+

--trust-remote-code \

|

| 44 |

+

--disable-log-requests \

|

| 45 |

+

--host 0.0.0.0 \

|

| 46 |

+

--port 8000

|

| 47 |

+

```
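Once the server started by the command above is running, it can be queried over vLLM's OpenAI-compatible HTTP API. The sketch below uses only the Python standard library and assumes the server is reachable at `http://localhost:8000` and that the model is addressed by the `--served-model-name` value `My_Model`. The function names are illustrative, not part of vLLM.

```python
import json
import urllib.request


def build_chat_request(prompt, image_url, model="My_Model", max_tokens=128):
    """Build an OpenAI-style chat payload with one image part and one text part."""
    return {
        "model": model,
        "max_tokens": max_tokens,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "image_url", "image_url": {"url": image_url}},
                    {"type": "text", "text": prompt},
                ],
            }
        ],
    }


def send_chat_request(payload, base_url="http://localhost:8000"):
    """POST the payload to the chat completions route and return the reply text."""
    request = urllib.request.Request(
        f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

For example, `send_chat_request(build_chat_request("Describe this image.", "https://qianwen-res.oss-cn-beijing.aliyuncs.com/Qwen-VL/assets/demo.jpeg"))` would send the same demo image used in the Quickstart to the local server.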

|

| 48 |

+

|

| 49 |

+

### 【Logs】

|

| 50 |

+

```

|

| 51 |

+

2025-10-04

|

| 52 |

+

1. Initial commit

|

| 53 |

+

```

|

| 54 |

+

|

| 55 |

+

### 【Model Files】

|

| 56 |

+

| File Size | Last Updated |

|

| 57 |

+

|-----------|--------------|

|

| 58 |

+

| `17GB` | `2025-10-04` |

|

| 59 |

+

|

| 60 |

+

### 【Model Download】

|

| 61 |

+

```python

|

| 62 |

+

from modelscope import snapshot_download

|

| 63 |

+

snapshot_download('tclf90/Qwen3-VL-30B-A3B-Instruct-AWQ', cache_dir="your_local_path")

|

| 64 |

+

```

|

| 65 |

+

|

| 66 |

+

### 【Overview】

|

| 67 |

+

<a href="https://chat.qwenlm.ai/" target="_blank" style="margin: 2px;">

|

| 68 |

+

<img alt="Chat" src="https://img.shields.io/badge/%F0%9F%92%9C%EF%B8%8F%20Qwen%20Chat%20-536af5" style="display: inline-block; vertical-align: middle;"/>

|

| 69 |

+

</a>

|

| 70 |

+

|

| 71 |

+

|

| 72 |

+

# Qwen3-VL-30B-A3B-Instruct

|

| 73 |

+

|

| 74 |

+

|

| 75 |

+

Meet Qwen3-VL — the most powerful vision-language model in the Qwen series to date.

|

| 76 |

+

|

| 77 |

+

This generation delivers comprehensive upgrades across the board: superior text understanding & generation, deeper visual perception & reasoning, extended context length, enhanced spatial and video dynamics comprehension, and stronger agent interaction capabilities.

|

| 78 |

+

|

| 79 |

+

Available in Dense and MoE architectures that scale from edge to cloud, with Instruct and reasoning‑enhanced Thinking editions for flexible, on‑demand deployment.

|

| 80 |

+

|

| 81 |

+

|

| 82 |

+

#### Key Enhancements:

|

| 83 |

+

|

| 84 |

+

* **Visual Agent**: Operates PC/mobile GUIs—recognizes elements, understands functions, invokes tools, completes tasks.

|

| 85 |

+

|

| 86 |

+

* **Visual Coding Boost**: Generates Draw.io/HTML/CSS/JS from images/videos.

|

| 87 |

+

|

| 88 |

+

* **Advanced Spatial Perception**: Judges object positions, viewpoints, and occlusions; provides stronger 2D grounding and enables 3D grounding for spatial reasoning and embodied AI.

|

| 89 |

+

|

| 90 |

+

* **Long Context & Video Understanding**: Native 256K context, expandable to 1M; handles books and hours-long video with full recall and second-level indexing.

|

| 91 |

+

|

| 92 |

+

* **Enhanced Multimodal Reasoning**: Excels in STEM/Math—causal analysis and logical, evidence-based answers.

|

| 93 |

+

|

| 94 |

+

* **Upgraded Visual Recognition**: Broader, higher-quality pretraining enables the model to “recognize everything”: celebrities, anime, products, landmarks, flora/fauna, etc.

|

| 95 |

+

|

| 96 |

+

* **Expanded OCR**: Supports 32 languages (up from 19); robust in low light, blur, and tilt; better with rare/ancient characters and jargon; improved long-document structure parsing.

|

| 97 |

+

|

| 98 |

+

* **Text Understanding on par with pure LLMs**: Seamless text–vision fusion for lossless, unified comprehension.

|

| 99 |

+

|

| 100 |

+

|

| 101 |

+

#### Model Architecture Updates:

|

| 102 |

+

|

| 103 |

+

<p align="center">

|

| 104 |

+

<img src="https://qianwen-res.oss-accelerate.aliyuncs.com/Qwen3-VL/qwen3vl_arc.jpg" width="80%"/>

|

| 105 |

+

</p>

|

| 106 |

+

|

| 107 |

+

|

| 108 |

+

1. **Interleaved-MRoPE**: Full‑frequency allocation over time, width, and height via robust positional embeddings, enhancing long‑horizon video reasoning.

|

| 109 |

+

|

| 110 |

+

2. **DeepStack**: Fuses multi‑level ViT features to capture fine‑grained details and sharpen image–text alignment.

|

| 111 |

+

|

| 112 |

+

3. **Text–Timestamp Alignment**: Moves beyond T‑RoPE to precise, timestamp‑grounded event localization for stronger video temporal modeling.

|

| 113 |

+

|

| 114 |

+

This is the weight repository for Qwen3-VL-30B-A3B-Instruct.

|

| 115 |

+

|

| 116 |

+

|

| 117 |

+

---

|

| 118 |

+

|

| 119 |

+

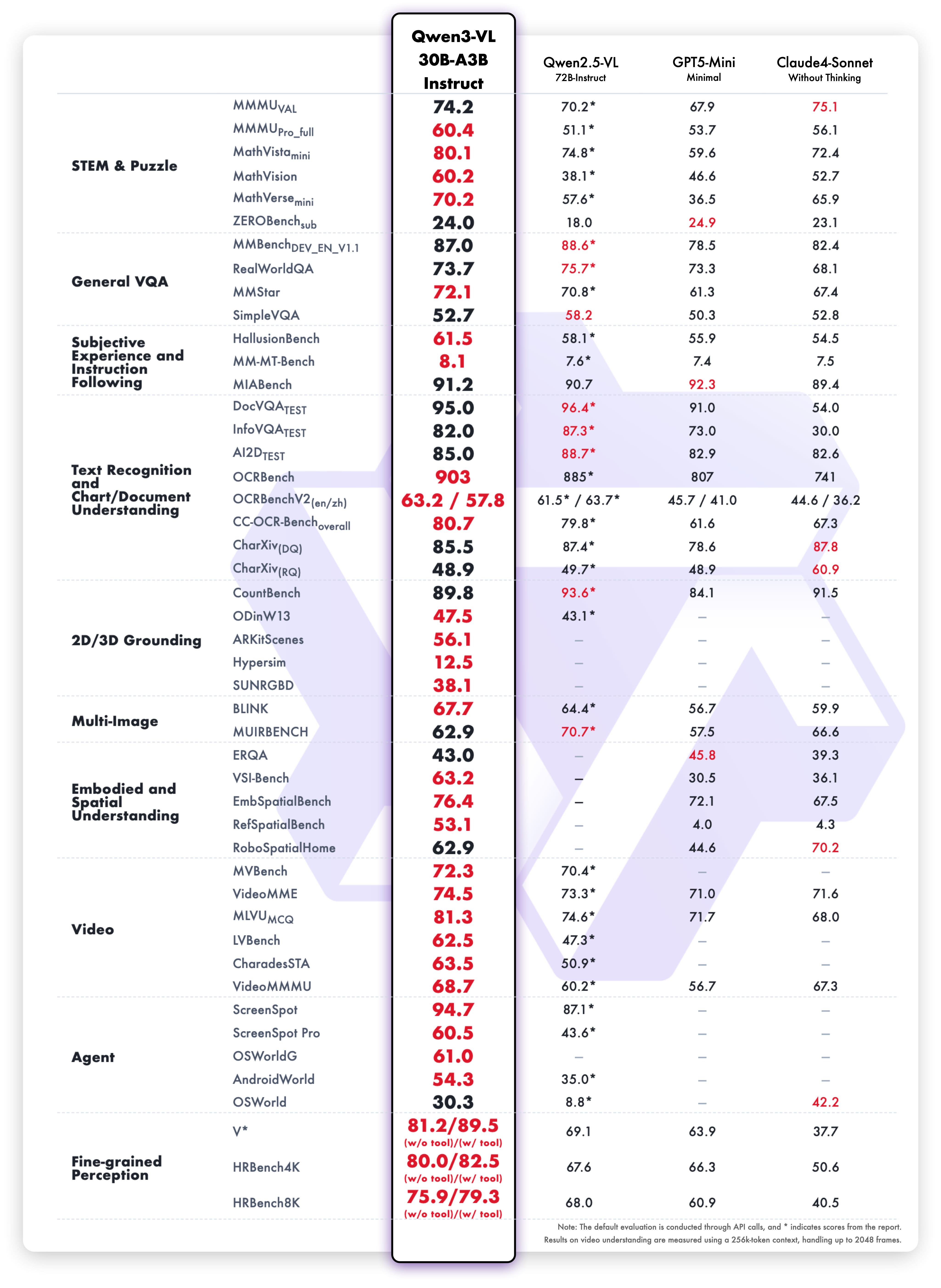

## Model Performance

|

| 120 |

+

|

| 121 |

+

**Multimodal performance**

|

| 122 |

+

|

| 123 |

+

|

| 124 |

+

|

| 125 |

+

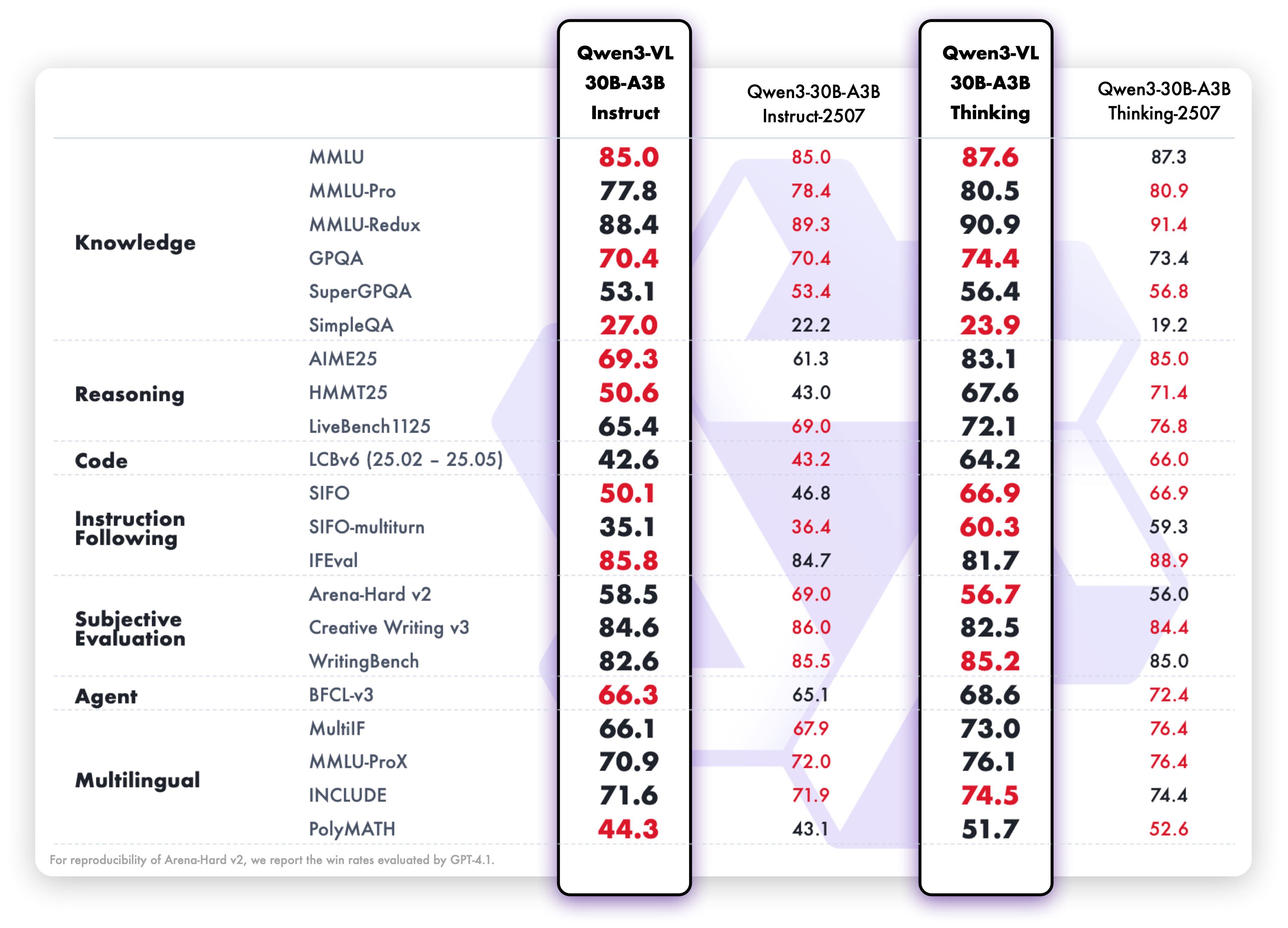

**Pure text performance**

|

| 126 |

+

|

| 127 |

+

|

| 128 |

+

## Quickstart

|

| 129 |

+

|

| 130 |

+

Below, we provide simple examples to show how to use Qwen3-VL with 🤖 ModelScope and 🤗 Transformers.

|

| 131 |

+

|

| 132 |

+

The Qwen3-VL code has been merged into the latest Hugging Face transformers, and we advise you to install it from source with the following command:

|

| 133 |

+

```bash

|

| 134 |

+

pip install git+https://github.com/huggingface/transformers

|

| 135 |

+

# pip install transformers==4.57.0 # currently, V4.57.0 is not released

|

| 136 |

+

```

|

| 137 |

+

|

| 138 |

+

### Using 🤗 Transformers to Chat

|

| 139 |

+

|

| 140 |

+

The following code snippet shows how to use the chat model with `transformers`:

|

| 141 |

+

|

| 142 |

+

```python

|

| 143 |

+

from transformers import Qwen3VLMoeForConditionalGeneration, AutoProcessor

|

| 144 |

+

|

| 145 |

+

# default: Load the model on the available device(s)

|

| 146 |

+

model = Qwen3VLMoeForConditionalGeneration.from_pretrained(

|

| 147 |

+

"Qwen/Qwen3-VL-30B-A3B-Instruct", dtype="auto", device_map="auto"

|

| 148 |

+

)

|

| 149 |

+

|

| 150 |

+

# We recommend enabling flash_attention_2 for better acceleration and memory saving, especially in multi-image and video scenarios.

|

| 151 |

+

# model = Qwen3VLMoeForConditionalGeneration.from_pretrained(

|

| 152 |

+

# "Qwen/Qwen3-VL-30B-A3B-Instruct",

|

| 153 |

+

# dtype=torch.bfloat16,

|

| 154 |

+

# attn_implementation="flash_attention_2",

|

| 155 |

+

# device_map="auto",

|

| 156 |

+

# )

|

| 157 |

+

|

| 158 |

+

processor = AutoProcessor.from_pretrained("Qwen/Qwen3-VL-30B-A3B-Instruct")

|

| 159 |

+

|

| 160 |

+

messages = [

|

| 161 |

+

{

|

| 162 |

+

"role": "user",

|

| 163 |

+

"content": [

|

| 164 |

+

{

|

| 165 |

+

"type": "image",

|

| 166 |

+

"image": "https://qianwen-res.oss-cn-beijing.aliyuncs.com/Qwen-VL/assets/demo.jpeg",

|

| 167 |

+

},

|

| 168 |

+

{"type": "text", "text": "Describe this image."},

|

| 169 |

+

],

|

| 170 |

+

}

|

| 171 |

+

]

|

| 172 |

+

|

| 173 |

+

# Preparation for inference

|

| 174 |

+

inputs = processor.apply_chat_template(

|

| 175 |

+

messages,

|

| 176 |

+

tokenize=True,

|

| 177 |

+

add_generation_prompt=True,

|

| 178 |

+

return_dict=True,

|

| 179 |

+

return_tensors="pt"

|

| 180 |

+

)
inputs = inputs.to(model.device)  # move the input tensors to the model's device before generation

|

| 181 |

+

|

| 182 |

+

# Inference: Generation of the output

|

| 183 |

+

generated_ids = model.generate(**inputs, max_new_tokens=128)

|

| 184 |

+

generated_ids_trimmed = [

|

| 185 |

+

out_ids[len(in_ids) :] for in_ids, out_ids in zip(inputs.input_ids, generated_ids)

|

| 186 |

+

]

|

| 187 |

+

output_text = processor.batch_decode(

|

| 188 |

+

generated_ids_trimmed, skip_special_tokens=True, clean_up_tokenization_spaces=False

|

| 189 |

+

)

|

| 190 |

+

print(output_text)

|

| 191 |

+

```
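The `generated_ids_trimmed` step in the snippet above relies on `generate` returning each prompt followed by its newly generated tokens, so slicing off `len(in_ids)` leaves only the answer. A toy illustration with plain lists follows; the token IDs are made up and `trim_prompt` is a hypothetical helper, not a `transformers` function.

```python
def trim_prompt(input_ids, generated_ids):
    """Drop the echoed prompt tokens from the front of each generated sequence."""
    return [out[len(inp):] for inp, out in zip(input_ids, generated_ids)]


# Made-up token IDs: two prompts and the sequences a model might return for them.
prompt_batch = [[101, 102, 103], [201, 202]]
generated_batch = [[101, 102, 103, 7, 8], [201, 202, 9]]

print(trim_prompt(prompt_batch, generated_batch))  # [[7, 8], [9]]
```

Only the trimmed sequences are then passed to `batch_decode`, which is why the printed output does not repeat the prompt.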

|

| 192 |

+

|

| 193 |

+

|

| 194 |

+

|

| 195 |

+

## Citation

|

| 196 |

+

|

| 197 |

+

If you find our work helpful, feel free to cite us.

|

| 198 |

+

|

| 199 |

+

```

|

| 200 |

+

@misc{qwen3technicalreport,

|

| 201 |

+

title={Qwen3 Technical Report},

|

| 202 |

+

author={Qwen Team},

|

| 203 |

+

year={2025},

|

| 204 |

+

eprint={2505.09388},

|

| 205 |

+

archivePrefix={arXiv},

|

| 206 |

+

primaryClass={cs.CL},

|

| 207 |

+

url={https://arxiv.org/abs/2505.09388},

|

| 208 |

+

}

|

| 209 |

+

|

| 210 |

+

@article{Qwen2.5-VL,

|

| 211 |

+

title={Qwen2.5-VL Technical Report},

|

| 212 |

+

author={Bai, Shuai and Chen, Keqin and Liu, Xuejing and Wang, Jialin and Ge, Wenbin and Song, Sibo and Dang, Kai and Wang, Peng and Wang, Shijie and Tang, Jun and Zhong, Humen and Zhu, Yuanzhi and Yang, Mingkun and Li, Zhaohai and Wan, Jianqiang and Wang, Pengfei and Ding, Wei and Fu, Zheren and Xu, Yiheng and Ye, Jiabo and Zhang, Xi and Xie, Tianbao and Cheng, Zesen and Zhang, Hang and Yang, Zhibo and Xu, Haiyang and Lin, Junyang},

|

| 213 |

+

journal={arXiv preprint arXiv:2502.13923},

|

| 214 |

+

year={2025}

|

| 215 |

+

}

|

| 216 |

+

|

| 217 |

+

@article{Qwen2VL,

|

| 218 |

+

title={Qwen2-VL: Enhancing Vision-Language Model's Perception of the World at Any Resolution},

|

| 219 |

+

author={Wang, Peng and Bai, Shuai and Tan, Sinan and Wang, Shijie and Fan, Zhihao and Bai, Jinze and Chen, Keqin and Liu, Xuejing and Wang, Jialin and Ge, Wenbin and Fan, Yang and Dang, Kai and Du, Mengfei and Ren, Xuancheng and Men, Rui and Liu, Dayiheng and Zhou, Chang and Zhou, Jingren and Lin, Junyang},

|

| 220 |

+

journal={arXiv preprint arXiv:2409.12191},

|

| 221 |

+

year={2024}

|

| 222 |

+

}

|

| 223 |

+

|

| 224 |

+

@article{Qwen-VL,

|

| 225 |

+

title={Qwen-VL: A Versatile Vision-Language Model for Understanding, Localization, Text Reading, and Beyond},

|

| 226 |

+

author={Bai, Jinze and Bai, Shuai and Yang, Shusheng and Wang, Shijie and Tan, Sinan and Wang, Peng and Lin, Junyang and Zhou, Chang and Zhou, Jingren},

|

| 227 |

+

journal={arXiv preprint arXiv:2308.12966},

|

| 228 |

+

year={2023}

|

| 229 |

+

}

|

| 230 |

+

```

|

chat_template.json

ADDED

|

@@ -0,0 +1,3 @@

|


| 1 |

+

{

|

| 2 |

+

"chat_template": "{%- if tools %}\n {{- '<|im_start|>system\\n' }}\n {%- if messages[0].role == 'system' %}\n {%- if messages[0].content is string %}\n {{- messages[0].content }}\n {%- else %}\n {%- for content in messages[0].content %}\n {%- if 'text' in content %}\n {{- content.text }}\n {%- endif %}\n {%- endfor %}\n {%- endif %}\n {{- '\\n\\n' }}\n {%- endif %}\n {{- \"# Tools\\n\\nYou may call one or more functions to assist with the user query.\\n\\nYou are provided with function signatures within <tools></tools> XML tags:\\n<tools>\" }}\n {%- for tool in tools %}\n {{- \"\\n\" }}\n {{- tool | tojson }}\n {%- endfor %}\n {{- \"\\n</tools>\\n\\nFor each function call, return a json object with function name and arguments within <tool_call></tool_call> XML tags:\\n<tool_call>\\n{\\\"name\\\": <function-name>, \\\"arguments\\\": <args-json-object>}\\n</tool_call><|im_end|>\\n\" }}\n{%- else %}\n {%- if messages[0].role == 'system' %}\n {{- '<|im_start|>system\\n' }}\n {%- if messages[0].content is string %}\n {{- messages[0].content }}\n {%- else %}\n {%- for content in messages[0].content %}\n {%- if 'text' in content %}\n {{- content.text }}\n {%- endif %}\n {%- endfor %}\n {%- endif %}\n {{- '<|im_end|>\\n' }}\n {%- endif %}\n{%- endif %}\n{%- set image_count = namespace(value=0) %}\n{%- set video_count = namespace(value=0) %}\n{%- for message in messages %}\n {%- if message.role == \"user\" %}\n {{- '<|im_start|>' + message.role + '\\n' }}\n {%- if message.content is string %}\n {{- message.content }}\n {%- else %}\n {%- for content in message.content %}\n {%- if content.type == 'image' or 'image' in content or 'image_url' in content %}\n {%- set image_count.value = image_count.value + 1 %}\n {%- if add_vision_id %}Picture {{ image_count.value }}: {% endif -%}\n <|vision_start|><|image_pad|><|vision_end|>\n {%- elif content.type == 'video' or 'video' in content %}\n {%- set video_count.value = video_count.value + 1 %}\n {%- if add_vision_id %}Video {{ 
video_count.value }}: {% endif -%}\n <|vision_start|><|video_pad|><|vision_end|>\n {%- elif 'text' in content %}\n {{- content.text }}\n {%- endif %}\n {%- endfor %}\n {%- endif %}\n {{- '<|im_end|>\\n' }}\n {%- elif message.role == \"assistant\" %}\n {{- '<|im_start|>' + message.role + '\\n' }}\n {%- if message.content is string %}\n {{- message.content }}\n {%- else %}\n {%- for content_item in message.content %}\n {%- if 'text' in content_item %}\n {{- content_item.text }}\n {%- endif %}\n {%- endfor %}\n {%- endif %}\n {%- if message.tool_calls %}\n {%- for tool_call in message.tool_calls %}\n {%- if (loop.first and message.content) or (not loop.first) %}\n {{- '\\n' }}\n {%- endif %}\n {%- if tool_call.function %}\n {%- set tool_call = tool_call.function %}\n {%- endif %}\n {{- '<tool_call>\\n{\"name\": \"' }}\n {{- tool_call.name }}\n {{- '\", \"arguments\": ' }}\n {%- if tool_call.arguments is string %}\n {{- tool_call.arguments }}\n {%- else %}\n {{- tool_call.arguments | tojson }}\n {%- endif %}\n {{- '}\\n</tool_call>' }}\n {%- endfor %}\n {%- endif %}\n {{- '<|im_end|>\\n' }}\n {%- elif message.role == \"tool\" %}\n {%- if loop.first or (messages[loop.index0 - 1].role != \"tool\") %}\n {{- '<|im_start|>user' }}\n {%- endif %}\n {{- '\\n<tool_response>\\n' }}\n {%- if message.content is string %}\n {{- message.content }}\n {%- else %}\n {%- for content in message.content %}\n {%- if content.type == 'image' or 'image' in content or 'image_url' in content %}\n {%- set image_count.value = image_count.value + 1 %}\n {%- if add_vision_id %}Picture {{ image_count.value }}: {% endif -%}\n <|vision_start|><|image_pad|><|vision_end|>\n {%- elif content.type == 'video' or 'video' in content %}\n {%- set video_count.value = video_count.value + 1 %}\n {%- if add_vision_id %}Video {{ video_count.value }}: {% endif -%}\n <|vision_start|><|video_pad|><|vision_end|>\n {%- elif 'text' in content %}\n {{- content.text }}\n {%- endif %}\n {%- endfor %}\n {%- endif %}\n {{- 
'\\n</tool_response>' }}\n {%- if loop.last or (messages[loop.index0 + 1].role != \"tool\") %}\n {{- '<|im_end|>\\n' }}\n {%- endif %}\n {%- endif %}\n{%- endfor %}\n{%- if add_generation_prompt %}\n {{- '<|im_start|>assistant\\n' }}\n{%- endif %}\n"

|

| 3 |

+

}
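To make the template above easier to follow, here is a simplified Python re-implementation of its behavior when no tools are involved. It is a sketch for illustration only: `render_chat` is a hypothetical function, tool definitions, tool calls, and `tool` messages are deliberately left out, and real inference should rely on `processor.apply_chat_template`.

```python
VISION = "<|vision_start|><|{kind}_pad|><|vision_end|>"


def render_chat(messages, add_generation_prompt=True, add_vision_id=False):
    """Render system/user/assistant messages the way the template does (no tools)."""
    counts = {"image": 0, "video": 0}

    def render_items(content, allow_vision):
        if isinstance(content, str):
            return content
        text = ""
        for item in content:
            is_image = item.get("type") == "image" or "image" in item or "image_url" in item
            is_video = item.get("type") == "video" or "video" in item
            if allow_vision and is_image:
                counts["image"] += 1
                if add_vision_id:
                    text += f"Picture {counts['image']}: "
                text += VISION.format(kind="image")
            elif allow_vision and is_video:
                counts["video"] += 1
                if add_vision_id:
                    text += f"Video {counts['video']}: "
                text += VISION.format(kind="video")
            elif "text" in item:
                text += item["text"]
        return text

    parts = []
    for index, message in enumerate(messages):
        role = message["role"]
        if role == "system" and index == 0:
            # Only a leading system message is emitted; its content is text only.
            parts.append("<|im_start|>system\n" + render_items(message["content"], False) + "<|im_end|>\n")
        elif role in ("user", "assistant"):
            # Vision placeholders are only expanded for user turns.
            body = render_items(message["content"], role == "user")
            parts.append(f"<|im_start|>{role}\n{body}<|im_end|>\n")
    if add_generation_prompt:
        parts.append("<|im_start|>assistant\n")
    return "".join(parts)
```

For the Quickstart message (one image plus "Describe this image."), this yields `<|im_start|>user\n<|vision_start|><|image_pad|><|vision_end|>Describe this image.<|im_end|>\n<|im_start|>assistant\n`.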

|

config.json

ADDED

|

@@ -0,0 +1,81 @@

|


| 1 |

+

{

|

| 2 |

+

"name_or_path": "tclf90/Qwen3-VL-30B-A3B-Instruct-AWQ",

|

| 3 |

+

"architectures": [

|

| 4 |

+

"Qwen3VLMoeForConditionalGeneration"

|

| 5 |

+

],

|

| 6 |

+

"image_token_id": 151655,

|

| 7 |

+

"model_type": "qwen3_vl_moe",

|

| 8 |

+

"text_config": {

|

| 9 |

+

"attention_bias": false,

|

| 10 |

+

"attention_dropout": 0.0,

|

| 11 |

+

"bos_token_id": 151643,

|

| 12 |

+

"decoder_sparse_step": 1,

|

| 13 |

+

"dtype": "bfloat16",

|

| 14 |

+

"eos_token_id": 151645,

|

| 15 |

+

"head_dim": 128,

|

| 16 |

+

"hidden_act": "silu",

|

| 17 |

+

"hidden_size": 2048,

|

| 18 |

+

"initializer_range": 0.02,

|

| 19 |

+

"intermediate_size": 6144,

|

| 20 |

+

"max_position_embeddings": 262144,

|

| 21 |

+

"mlp_only_layers": [],

|

| 22 |

+

"model_type": "qwen3_vl_moe_text",

|

| 23 |

+

"moe_intermediate_size": 768,

|

| 24 |

+

"norm_topk_prob": true,

|

| 25 |

+

"num_attention_heads": 32,

|

| 26 |

+

"num_experts": 128,

|

| 27 |

+

"num_experts_per_tok": 8,

|

| 28 |

+

"num_hidden_layers": 48,

|

| 29 |

+

"num_key_value_heads": 4,

|

| 30 |

+

"rms_norm_eps": 1e-06,

|

| 31 |

+

"rope_scaling": {

|

| 32 |

+

"mrope_interleaved": true,

|

| 33 |

+

"mrope_section": [

|

| 34 |

+

24,

|

| 35 |

+

20,

|

| 36 |

+

20

|

| 37 |

+

],

|

| 38 |

+

"rope_type": "default"

|

| 39 |

+

},

|

| 40 |

+

"rope_theta": 5000000,

|

| 41 |

+

"use_cache": true,

|

| 42 |

+

"vocab_size": 151936

|

| 43 |

+

},

|

| 44 |

+

"tie_word_embeddings": false,

|

| 45 |

+

"transformers_version": "4.57.0.dev0",

|

| 46 |

+

"video_token_id": 151656,

|

| 47 |

+

"vision_config": {

|

| 48 |

+

"deepstack_visual_indexes": [

|

| 49 |

+

8,

|

| 50 |

+

16,

|

| 51 |

+

24

|

| 52 |

+

],

|

| 53 |

+

"depth": 27,

|

| 54 |

+

"hidden_act": "gelu_pytorch_tanh",

|

| 55 |

+

"hidden_size": 1152,

|

| 56 |

+

"in_channels": 3,

|

| 57 |

+

"initializer_range": 0.02,

|

| 58 |

+

"intermediate_size": 4304,

|

| 59 |

+

"model_type": "qwen3_vl_moe",

|

| 60 |

+

"num_heads": 16,

|

| 61 |

+

"num_position_embeddings": 2304,

|

| 62 |

+

"out_hidden_size": 2048,

|

| 63 |

+

"patch_size": 16,

|

| 64 |

+

"spatial_merge_size": 2,

|

| 65 |

+

"temporal_patch_size": 2

|

| 66 |

+

},

|

| 67 |

+

"vision_end_token_id": 151653,

|

| 68 |

+

"vision_start_token_id": 151652,

|

| 69 |

+

"torch_dtype": "float16",

|

| 70 |

+

"quantization_config": {

|

| 71 |

+

"quant_method": "awq",

|

| 72 |

+

"bits": 4,

|

| 73 |

+

"group_size": 128,

|

| 74 |

+

"version": "gemm",

|

| 75 |

+

"zero_point": true,

|

| 76 |

+

"modules_to_not_convert": [

|

| 77 |

+

"visual",

|

| 78 |

+

"mlp.gate"

|

| 79 |

+

]

|

| 80 |

+

}

|

| 81 |

+

}
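The `text_config` values above allow a rough estimate of KV-cache memory, which is what `--max-model-len` and `--max-num-seqs` in the startup command have to fit into. The sketch below assumes a standard grouped-query-attention cache (one key and one value vector per layer, per KV head, per token) stored in 16-bit precision. Actual usage in vLLM depends on its block allocator and cache dtype, so treat the numbers as an approximation.

```python
def kv_cache_bytes_per_token(num_layers=48, num_kv_heads=4, head_dim=128, bytes_per_value=2):
    """Bytes of keys plus values stored for one token across all layers."""
    # Defaults mirror num_hidden_layers, num_key_value_heads and head_dim in config.json.
    return 2 * num_layers * num_kv_heads * head_dim * bytes_per_value


per_token = kv_cache_bytes_per_token()
per_sequence = per_token * 32768  # CONTEXT_LENGTH from the startup command

print(per_token)             # 98304 bytes, i.e. 96 KiB per token
print(per_sequence / 2**30)  # 3.0 GiB for one full-length sequence
```

Under these assumptions, eight concurrent full-length sequences would need about 24 GiB of cache in total, shared across the two tensor-parallel GPUs.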

|

configuration.json

ADDED

|

@@ -0,0 +1 @@

|


| 1 |

+

{"framework":"Pytorch","task":"image-text-to-text"}

|

generation_config.json

ADDED

|

@@ -0,0 +1,14 @@

|


| 1 |

+

{

|

| 2 |

+

"bos_token_id": 151643,

|

| 3 |

+

"pad_token_id": 151643,

|

| 4 |

+

"do_sample": true,

|

| 5 |

+

"eos_token_id": [

|

| 6 |

+

151645,

|

| 7 |

+

151643

|

| 8 |

+

],

|

| 9 |

+

"top_p": 0.8,

|

| 10 |

+

"top_k": 20,

|

| 11 |

+

"temperature": 0.7,

|

| 12 |

+

"repetition_penalty": 1.0,

|

| 13 |

+

"transformers_version": "4.56.0"

|

| 14 |

+

}
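The sampling fields above (`temperature`, `top_k`, `top_p`) are applied to the next-token distribution in sequence. The following pure-Python sketch illustrates the usual order (temperature scaling, then top-k, then nucleus filtering on the renormalized top-k set); `filter_distribution` is a hypothetical helper written for illustration and is not the `transformers` implementation.

```python
import math


def filter_distribution(logits, temperature=0.7, top_k=20, top_p=0.8):
    """Return {token_index: probability} after temperature, top-k and top-p filtering."""
    scaled = [value / temperature for value in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    weights = [math.exp(value - peak) for value in scaled]
    total = sum(weights)
    probs = [weight / total for weight in weights]

    # Top-k: keep the k most likely tokens and renormalize over them.
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    top_k_mass = sum(probs[i] for i in ranked)

    # Top-p: keep the smallest prefix whose cumulative probability reaches top_p.
    kept, cumulative = [], 0.0
    for index in ranked:
        kept.append(index)
        cumulative += probs[index] / top_k_mass
        if cumulative >= top_p:
            break

    kept_mass = sum(probs[i] for i in kept)
    return {i: probs[i] / kept_mass for i in kept}
```

With logits `[2.0, 1.0, 0.0, -1.0]` and the defaults from `generation_config.json`, only the two most likely tokens survive, and sampling then draws from their renormalized probabilities.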

|

merges.txt

ADDED

|

The diff for this file is too large to render.

See raw diff

|

|

|

model-00001-of-00006.safetensors

ADDED

|

@@ -0,0 +1,3 @@

|


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:e8345a70ba4f4f868a63a851136593812ec3d491b4dca4d13be6e9bd062fb6de

|

| 3 |

+

size 135
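Each weight shard in this commit is recorded as a Git LFS pointer: three `key value` lines giving the spec version, the object's SHA-256 digest, and its size in bytes. A minimal parser sketch follows; `parse_lfs_pointer` is a hypothetical helper, not part of Git LFS or `huggingface_hub`.

```python
def parse_lfs_pointer(text):
    """Split a Git LFS pointer file into its version, hash algorithm, digest and size."""
    fields = dict(line.split(" ", 1) for line in text.strip().splitlines())
    algorithm, digest = fields["oid"].split(":", 1)
    return {
        "version": fields["version"],
        "algorithm": algorithm,
        "digest": digest,
        "size": int(fields["size"]),
    }


pointer = """version https://git-lfs.github.com/spec/v1
oid sha256:e8345a70ba4f4f868a63a851136593812ec3d491b4dca4d13be6e9bd062fb6de
size 135
"""
info = parse_lfs_pointer(pointer)
print(info["algorithm"], info["size"])  # sha256 135
```

The `size` field is the byte count of the object the pointer refers to, so it can be compared against a downloaded file as a quick integrity check.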

|

model-00002-of-00006.safetensors

ADDED

|

@@ -0,0 +1,3 @@

|


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:4eee4a42defb1cdb1719f6fcac127e0ecbf1f399f3205da7faf34a0d65dd57c4

|

| 3 |

+

size 135

|

model-00003-of-00006.safetensors

ADDED

|

@@ -0,0 +1,3 @@

|


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:0a8f7d2475360707307238ec041282f6466b871a016ba4addfbe09e23e96a53b

|

| 3 |

+

size 135

|

model-00004-of-00006.safetensors

ADDED

|

@@ -0,0 +1,3 @@

|


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:c3d4f27422d671ec26772a93ec6d460185dee4bd746666f71b2a8c82a6e7f5e7

|

| 3 |

+

size 135

|

model-00005-of-00006.safetensors

ADDED

|

@@ -0,0 +1,3 @@

|


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:7c2ac164b5b39b481db10ae01b3dfd6e5eba5c4fb3c497dca6788dbf16d637a2

|

| 3 |

+

size 135

|

model-00006-of-00006.safetensors

ADDED

|

@@ -0,0 +1,3 @@

|


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:96fa21738e1f36f2f84f1be28ca54e56823a2a8a22f4149548e7bc0c291ae3a8

|

| 3 |

+

size 135

|

model.safetensors.index.json

ADDED

|

The diff for this file is too large to render.

See raw diff

|

|

|

preprocessor_config.json

ADDED

|

@@ -0,0 +1,21 @@

|


| 1 |

+

{

|

| 2 |

+

"size": {

|

| 3 |

+

"longest_edge": 16777216,

|

| 4 |

+

"shortest_edge": 65536

|

| 5 |

+

},

|

| 6 |

+

"patch_size": 16,

|

| 7 |

+

"temporal_patch_size": 2,

|

| 8 |

+

"merge_size": 2,

|

| 9 |

+

"image_mean": [

|

| 10 |

+

0.5,

|

| 11 |

+

0.5,

|

| 12 |

+

0.5

|

| 13 |

+

],

|

| 14 |

+

"image_std": [

|

| 15 |

+

0.5,

|

| 16 |

+

0.5,

|

| 17 |

+

0.5

|

| 18 |

+

],

|

| 19 |

+

"processor_class": "Qwen3VLProcessor",

|

| 20 |

+

"image_processor_type": "Qwen2VLImageProcessorFast"

|

| 21 |

+

}
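The preprocessor settings above determine how many visual tokens an image contributes to the prompt. The sketch below assumes, as in the Qwen2-VL image processor, that `shortest_edge` and `longest_edge` act as minimum and maximum total pixel budgets, and that each visual token covers a `patch_size * merge_size` square (32 x 32 pixels here). The resizing logic is modelled on that processor's `smart_resize` but is written from memory for illustration, so treat it as an approximation.

```python
import math

PATCH_SIZE = 16        # "patch_size"
MERGE_SIZE = 2         # "merge_size"
MIN_PIXELS = 65536     # "shortest_edge", assumed to be a total pixel budget
MAX_PIXELS = 16777216  # "longest_edge", assumed to be a total pixel budget


def smart_resize(height, width, factor=PATCH_SIZE * MERGE_SIZE):
    """Round both sides to multiples of `factor` while respecting the pixel budgets."""
    h_bar = max(factor, round(height / factor) * factor)
    w_bar = max(factor, round(width / factor) * factor)
    if h_bar * w_bar > MAX_PIXELS:
        beta = math.sqrt(height * width / MAX_PIXELS)
        h_bar = math.floor(height / beta / factor) * factor
        w_bar = math.floor(width / beta / factor) * factor
    elif h_bar * w_bar < MIN_PIXELS:
        beta = math.sqrt(MIN_PIXELS / (height * width))
        h_bar = math.ceil(height * beta / factor) * factor
        w_bar = math.ceil(width * beta / factor) * factor
    return h_bar, w_bar


def visual_token_count(height, width):
    """Number of merged visual tokens for an image of the given size."""
    h_bar, w_bar = smart_resize(height, width)
    return (h_bar // PATCH_SIZE) * (w_bar // PATCH_SIZE) // (MERGE_SIZE ** 2)


print(visual_token_count(1024, 1024))  # 1024
print(visual_token_count(64, 64))      # 64 (upscaled to 256 x 256 first)
```

Under these assumptions an image costs between 64 and 16384 tokens, which is worth keeping in mind when choosing `--max-model-len`.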

|

tokenizer.json

ADDED

|

The diff for this file is too large to render.

See raw diff

|

|

|

tokenizer_config.json

ADDED

|

@@ -0,0 +1,239 @@

|


| 1 |

+

{

|

| 2 |

+

"add_bos_token": false,

|

| 3 |

+

"add_prefix_space": false,

|

| 4 |

+

"added_tokens_decoder": {

|

| 5 |

+

"151643": {

|

| 6 |

+

"content": "<|endoftext|>",

|

| 7 |

+

"lstrip": false,

|

| 8 |

+

"normalized": false,

|

| 9 |

+

"rstrip": false,

|

| 10 |

+

"single_word": false,

|

| 11 |

+

"special": true

|

| 12 |

+

},

|

| 13 |

+

"151644": {

|

| 14 |

+

"content": "<|im_start|>",

|

| 15 |

+

"lstrip": false,

|

| 16 |

+

"normalized": false,

|

| 17 |

+

"rstrip": false,

|

| 18 |

+

"single_word": false,

|

| 19 |

+

"special": true

|

| 20 |

+

},

|

| 21 |

+

"151645": {

|

| 22 |

+

"content": "<|im_end|>",

|

| 23 |

+

"lstrip": false,

|

| 24 |

+

"normalized": false,

|

| 25 |

+

"rstrip": false,

|

| 26 |

+

"single_word": false,

|

| 27 |

+

"special": true

|

| 28 |

+

},

|

| 29 |

+

"151646": {

|

| 30 |

+

"content": "<|object_ref_start|>",

|

| 31 |

+

"lstrip": false,

|

| 32 |

+

"normalized": false,

|

| 33 |

+

"rstrip": false,

|

| 34 |

+

"single_word": false,

|

| 35 |

+

"special": true

|

| 36 |

+

},

|

| 37 |

+

"151647": {

|

| 38 |

+

"content": "<|object_ref_end|>",

|

| 39 |

+

"lstrip": false,

|

| 40 |

+

"normalized": false,

|

| 41 |

+

"rstrip": false,

|

| 42 |

+

"single_word": false,

|

| 43 |

+

"special": true

|

| 44 |

+

},

|

| 45 |

+

"151648": {

|

| 46 |

+

"content": "<|box_start|>",

|

| 47 |

+

"lstrip": false,

|

| 48 |

+

"normalized": false,

|

| 49 |

+

"rstrip": false,

|

| 50 |

+

"single_word": false,

|

| 51 |

+

"special": true

|

| 52 |

+

},

|

| 53 |

+

"151649": {

|

| 54 |

+

"content": "<|box_end|>",

|

| 55 |

+

"lstrip": false,

|

| 56 |

+

"normalized": false,

|

| 57 |

+

"rstrip": false,

|

| 58 |

+

"single_word": false,

|

| 59 |

+

"special": true

|

| 60 |

+

},

|

| 61 |

+

"151650": {

|

| 62 |

+

"content": "<|quad_start|>",

|

| 63 |

+

"lstrip": false,

|

| 64 |

+

"normalized": false,

|

| 65 |

+

"rstrip": false,

|

| 66 |

+

"single_word": false,

|

| 67 |

+

"special": true

|

| 68 |

+

},

|

| 69 |

+

"151651": {

|

| 70 |

+

"content": "<|quad_end|>",

|

| 71 |

+

"lstrip": false,

|

| 72 |

+

"normalized": false,

|

| 73 |

+

"rstrip": false,

|

| 74 |

+

"single_word": false,

|

| 75 |

+

"special": true

|

| 76 |

+

},

|

| 77 |

+

"151652": {

|

| 78 |

+

"content": "<|vision_start|>",

|

| 79 |

+

"lstrip": false,

|

| 80 |

+

"normalized": false,

|

| 81 |

+

"rstrip": false,

|

| 82 |

+

"single_word": false,

|

| 83 |

+

"special": true

|

| 84 |

+

},

|

| 85 |

+

"151653": {

|

| 86 |

+

"content": "<|vision_end|>",

|

| 87 |

+

"lstrip": false,

|

| 88 |

+

"normalized": false,

|

| 89 |

+

"rstrip": false,

|

| 90 |

+

"single_word": false,

|

| 91 |

+

"special": true

|

| 92 |

+

},

|

| 93 |

+

"151654": {

|

| 94 |

+

"content": "<|vision_pad|>",

|

| 95 |

+

"lstrip": false,

|

| 96 |

+

"normalized": false,

|

| 97 |

+

"rstrip": false,

|

| 98 |

+

"single_word": false,

|

| 99 |

+

"special": true

|

| 100 |

+

},

|

| 101 |

+

"151655": {

|

| 102 |

+

"content": "<|image_pad|>",

|

| 103 |

+

"lstrip": false,

|

| 104 |

+

"normalized": false,

|

| 105 |

+

"rstrip": false,

|

| 106 |

+

"single_word": false,

|

| 107 |

+

"special": true

|

| 108 |

+

},

|

| 109 |

+

"151656": {

|

| 110 |

+

"content": "<|video_pad|>",

|

| 111 |

+

"lstrip": false,

|

| 112 |

+

"normalized": false,

|

| 113 |

+

"rstrip": false,

|

| 114 |

+

"single_word": false,

|

| 115 |

+

"special": true

|

| 116 |

+

},

|

| 117 |

+

"151657": {

|

| 118 |

+

"content": "<tool_call>",

|

| 119 |

+

"lstrip": false,

|

| 120 |

+

"normalized": false,

|

| 121 |

+

"rstrip": false,

|

| 122 |

+

"single_word": false,

|

| 123 |

+

"special": false

|

| 124 |

+

},

|

| 125 |

+

"151658": {

|

| 126 |

+

"content": "</tool_call>",

|

| 127 |

+

"lstrip": false,

|

| 128 |

+

"normalized": false,

|

| 129 |

+

"rstrip": false,

|

| 130 |

+

"single_word": false,

|

| 131 |

+

"special": false

|

| 132 |

+

},

|

| 133 |

+

"151659": {

|

| 134 |

+

"content": "<|fim_prefix|>",

|

| 135 |

+

"lstrip": false,

|

| 136 |

+

"normalized": false,

|

| 137 |

+

"rstrip": false,

|

| 138 |

+

"single_word": false,

|

| 139 |

+

"special": false

|

| 140 |

+

},

|

| 141 |

+

"151660": {

|

| 142 |

+

"content": "<|fim_middle|>",

|

| 143 |

+

"lstrip": false,

|

| 144 |

+

"normalized": false,

|

| 145 |

+

"rstrip": false,

|

| 146 |

+

"single_word": false,

|

| 147 |

+

"special": false

|

| 148 |

+

},

|

| 149 |

+

"151661": {

|

| 150 |

+

"content": "<|fim_suffix|>",

|

| 151 |

+

"lstrip": false,

|

| 152 |

+

"normalized": false,

|

| 153 |

+

"rstrip": false,

|

| 154 |

+

"single_word": false,

|

| 155 |

+

"special": false

|

| 156 |

+

},

|

| 157 |

+

"151662": {

|

| 158 |

+

"content": "<|fim_pad|>",

|

| 159 |

+

"lstrip": false,

|

| 160 |

+

"normalized": false,

|

| 161 |

+

"rstrip": false,

|

| 162 |

+

"single_word": false,

|

| 163 |

+

"special": false

|

| 164 |

+

},

|

| 165 |

+

"151663": {

|

| 166 |

+

"content": "<|repo_name|>",

|

| 167 |

+

"lstrip": false,

|

| 168 |

+

"normalized": false,

|

| 169 |

+

"rstrip": false,

|

| 170 |

+

"single_word": false,

|

| 171 |

+

"special": false

|

| 172 |

+

},

|

| 173 |

+

"151664": {

|

| 174 |

+

"content": "<|file_sep|>",

|

| 175 |

+

"lstrip": false,

|

| 176 |

+

"normalized": false,

|

| 177 |

+

"rstrip": false,

|

| 178 |

+

"single_word": false,

|

| 179 |

+

"special": false

|

| 180 |

+

},

|

| 181 |

+

"151665": {

|

| 182 |

+

"content": "<tool_response>",

|

| 183 |

+

"lstrip": false,

|

| 184 |

+

"normalized": false,

|

| 185 |

+

"rstrip": false,

|

| 186 |

+

"single_word": false,

|

| 187 |

+

"special": false

|

| 188 |

+

},

|

| 189 |

+

"151666": {

|

| 190 |

+

"content": "</tool_response>",

|

| 191 |

+

"lstrip": false,

|

| 192 |

+

"normalized": false,

|

| 193 |

+

"rstrip": false,

|

| 194 |

+

"single_word": false,

|

| 195 |

+

"special": false

|

| 196 |

+

},

|

| 197 |

+

"151667": {

|

| 198 |

+

"content": "<think>",

|

| 199 |

+

"lstrip": false,

|

| 200 |

+

"normalized": false,

|

| 201 |

+

"rstrip": false,

|

| 202 |

+

"single_word": false,

|

| 203 |

+

"special": false

|

| 204 |

+

},

|

| 205 |

+

"151668": {

|

| 206 |

+

"content": "</think>",

|

| 207 |

+

"lstrip": false,

|

| 208 |

+

"normalized": false,

|

| 209 |

+

"rstrip": false,

|

| 210 |

+

"single_word": false,

|

| 211 |

+

"special": false

|

| 212 |

+

}

|

| 213 |

+

},

|

| 214 |

+

"additional_special_tokens": [

|

| 215 |

+

"<|im_start|>",

|

| 216 |

+

"<|im_end|>",

|

| 217 |

+

"<|object_ref_start|>",

|

| 218 |

+

"<|object_ref_end|>",

|

| 219 |

+

"<|box_start|>",

|

| 220 |

+

"<|box_end|>",

|

| 221 |

+

"<|quad_start|>",

|

| 222 |

+

"<|quad_end|>",

|

| 223 |

+

"<|vision_start|>",

|

| 224 |

+

"<|vision_end|>",

|

| 225 |

+

"<|vision_pad|>",

|

| 226 |

+

"<|image_pad|>",

|

| 227 |

+

"<|video_pad|>"

|

| 228 |

+

],

|

| 229 |

+

"bos_token": null,

|

| 230 |

+

"chat_template": "{%- if tools %}\n {{- '<|im_start|>system\\n' }}\n {%- if messages[0].role == 'system' %}\n {%- if messages[0].content is string %}\n {{- messages[0].content }}\n {%- else %}\n {%- for content in messages[0].content %}\n {%- if 'text' in content %}\n {{- content.text }}\n {%- endif %}\n {%- endfor %}\n {%- endif %}\n {{- '\\n\\n' }}\n {%- endif %}\n {{- \"# Tools\\n\\nYou may call one or more functions to assist with the user query.\\n\\nYou are provided with function signatures within <tools></tools> XML tags:\\n<tools>\" }}\n {%- for tool in tools %}\n {{- \"\\n\" }}\n {{- tool | tojson }}\n {%- endfor %}\n {{- \"\\n</tools>\\n\\nFor each function call, return a json object with function name and arguments within <tool_call></tool_call> XML tags:\\n<tool_call>\\n{\\\"name\\\": <function-name>, \\\"arguments\\\": <args-json-object>}\\n</tool_call><|im_end|>\\n\" }}\n{%- else %}\n {%- if messages[0].role == 'system' %}\n {{- '<|im_start|>system\\n' }}\n {%- if messages[0].content is string %}\n {{- messages[0].content }}\n {%- else %}\n {%- for content in messages[0].content %}\n {%- if 'text' in content %}\n {{- content.text }}\n {%- endif %}\n {%- endfor %}\n {%- endif %}\n {{- '<|im_end|>\\n' }}\n {%- endif %}\n{%- endif %}\n{%- set image_count = namespace(value=0) %}\n{%- set video_count = namespace(value=0) %}\n{%- for message in messages %}\n {%- if message.role == \"user\" %}\n {{- '<|im_start|>' + message.role + '\\n' }}\n {%- if message.content is string %}\n {{- message.content }}\n {%- else %}\n {%- for content in message.content %}\n {%- if content.type == 'image' or 'image' in content or 'image_url' in content %}\n {%- set image_count.value = image_count.value + 1 %}\n {%- if add_vision_id %}Picture {{ image_count.value }}: {% endif -%}\n <|vision_start|><|image_pad|><|vision_end|>\n {%- elif content.type == 'video' or 'video' in content %}\n {%- set video_count.value = video_count.value + 1 %}\n {%- if add_vision_id %}Video {{ 
video_count.value }}: {% endif -%}\n <|vision_start|><|video_pad|><|vision_end|>\n {%- elif 'text' in content %}\n {{- content.text }}\n {%- endif %}\n {%- endfor %}\n {%- endif %}\n {{- '<|im_end|>\\n' }}\n {%- elif message.role == \"assistant\" %}\n {{- '<|im_start|>' + message.role + '\\n' }}\n {%- if message.content is string %}\n {{- message.content }}\n {%- else %}\n {%- for content_item in message.content %}\n {%- if 'text' in content_item %}\n {{- content_item.text }}\n {%- endif %}\n {%- endfor %}\n {%- endif %}\n {%- if message.tool_calls %}\n {%- for tool_call in message.tool_calls %}\n {%- if (loop.first and message.content) or (not loop.first) %}\n {{- '\\n' }}\n {%- endif %}\n {%- if tool_call.function %}\n {%- set tool_call = tool_call.function %}\n {%- endif %}\n {{- '<tool_call>\\n{\"name\": \"' }}\n {{- tool_call.name }}\n {{- '\", \"arguments\": ' }}\n {%- if tool_call.arguments is string %}\n {{- tool_call.arguments }}\n {%- else %}\n {{- tool_call.arguments | tojson }}\n {%- endif %}\n {{- '}\\n</tool_call>' }}\n {%- endfor %}\n {%- endif %}\n {{- '<|im_end|>\\n' }}\n {%- elif message.role == \"tool\" %}\n {%- if loop.first or (messages[loop.index0 - 1].role != \"tool\") %}\n {{- '<|im_start|>user' }}\n {%- endif %}\n {{- '\\n<tool_response>\\n' }}\n {%- if message.content is string %}\n {{- message.content }}\n {%- else %}\n {%- for content in message.content %}\n {%- if content.type == 'image' or 'image' in content or 'image_url' in content %}\n {%- set image_count.value = image_count.value + 1 %}\n {%- if add_vision_id %}Picture {{ image_count.value }}: {% endif -%}\n <|vision_start|><|image_pad|><|vision_end|>\n {%- elif content.type == 'video' or 'video' in content %}\n {%- set video_count.value = video_count.value + 1 %}\n {%- if add_vision_id %}Video {{ video_count.value }}: {% endif -%}\n <|vision_start|><|video_pad|><|vision_end|>\n {%- elif 'text' in content %}\n {{- content.text }}\n {%- endif %}\n {%- endfor %}\n {%- endif %}\n {{- 
'\\n</tool_response>' }}\n {%- if loop.last or (messages[loop.index0 + 1].role != \"tool\") %}\n {{- '<|im_end|>\\n' }}\n {%- endif %}\n {%- endif %}\n{%- endfor %}\n{%- if add_generation_prompt %}\n {{- '<|im_start|>assistant\\n' }}\n{%- endif %}\n",

|

| 231 |

+

"clean_up_tokenization_spaces": false,

|

| 232 |

+

"eos_token": "<|im_end|>",

|

| 233 |

+

"errors": "replace",

|

| 234 |

+

"model_max_length": 262144,

|

| 235 |

+

"pad_token": "<|endoftext|>",

|

| 236 |

+

"split_special_tokens": false,

|

| 237 |

+

"tokenizer_class": "Qwen2Tokenizer",

|

| 238 |

+

"unk_token": null

|

| 239 |

+

}

|
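The `chat_template` value above is easier to follow with a worked rendering. The sketch below is a simplified plain-Python re-implementation of only the system, user, and assistant text/image/video branches; it is not the Jinja template itself and it does not use any library API. The function names `render_chat` and `_render_parts` are hypothetical, and the tools, `tool_calls`, and `tool` role branches are deliberately left out.

```python
def _render_parts(content, counts, add_vision_id, allow_vision):
    """Render a message's content (a string or a list of parts) to text."""
    if isinstance(content, str):
        return content
    out = []
    for part in content:
        kind = None
        if allow_vision:
            # Same checks as the template's user branch: image first, then video.
            if part.get("type") == "image" or "image" in part or "image_url" in part:
                kind = "image"
            elif part.get("type") == "video" or "video" in part:
                kind = "video"
        if kind:
            counts[kind] += 1
            if add_vision_id:
                label = "Picture" if kind == "image" else "Video"
                out.append(f"{label} {counts[kind]}: ")
            out.append(f"<|vision_start|><|{kind}_pad|><|vision_end|>")
        elif "text" in part:
            out.append(part["text"])
    return "".join(out)


def render_chat(messages, add_generation_prompt=False, add_vision_id=False):
    """Approximate the template output for conversations without tools."""
    counts = {"image": 0, "video": 0}
    out = []
    # A leading system message is emitted once, text parts only.
    if messages and messages[0]["role"] == "system":
        out.append("<|im_start|>system\n")
        out.append(_render_parts(messages[0]["content"], counts, add_vision_id, False))
        out.append("<|im_end|>\n")
    for message in messages:
        role = message["role"]
        if role == "user":
            body = _render_parts(message["content"], counts, add_vision_id, True)
            out.append("<|im_start|>user\n" + body + "<|im_end|>\n")
        elif role == "assistant":
            body = _render_parts(message["content"], counts, add_vision_id, False)
            out.append("<|im_start|>assistant\n" + body + "<|im_end|>\n")
        # Other roles (system inside the loop, tool) are skipped in this sketch.
    if add_generation_prompt:
        out.append("<|im_start|>assistant\n")
    return "".join(out)


example = [
    {"role": "system", "content": "You are helpful."},
    {"role": "user", "content": [
        {"type": "image", "image": "cat.png"},  # hypothetical file name
        {"type": "text", "text": "Describe this."},
    ]},
]
prompt = render_chat(example, add_generation_prompt=True)
print(prompt)
```

Each image or video part becomes a single `<|image_pad|>` or `<|video_pad|>` placeholder wrapped in `<|vision_start|>` and `<|vision_end|>`, and `add_vision_id` prefixes it with a running `Picture N: ` or `Video N: ` label.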

video_preprocessor_config.json

ADDED

|

@@ -0,0 +1,21 @@

|

| 1 |

+

{

|

| 2 |

+

"size": {

|

| 3 |

+

"longest_edge": 25165824,

|

| 4 |

+

"shortest_edge": 4096

|

| 5 |

+

},

|

| 6 |

+

"patch_size": 16,

|

| 7 |

+

"temporal_patch_size": 2,

|

| 8 |

+

"merge_size": 2,

|

| 9 |

+

"image_mean": [

|

| 10 |

+

0.5,

|

| 11 |

+

0.5,

|

| 12 |

+

0.5

|

| 13 |

+

],

|

| 14 |

+

"image_std": [

|

| 15 |

+

0.5,

|

| 16 |

+

0.5,

|

| 17 |

+

0.5

|

| 18 |

+

],

|

| 19 |

+

"processor_class": "Qwen3VLProcessor",

|

| 20 |

+

"video_processor_type": "Qwen3VLVideoProcessor"

|

| 21 |

+

}

|
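The numeric fields in `video_preprocessor_config.json` can be read through a small arithmetic sketch. The normalization step follows directly from `image_mean` and `image_std`. The token-count formula is an assumption about how a patch-and-merge vision encoder typically consumes these values, not something this diff states, and the helper names `normalize_pixel` and `video_token_count` are hypothetical.

```python
# Values copied from video_preprocessor_config.json above.
PATCH_SIZE = 16
TEMPORAL_PATCH_SIZE = 2
MERGE_SIZE = 2
IMAGE_MEAN = (0.5, 0.5, 0.5)
IMAGE_STD = (0.5, 0.5, 0.5)


def normalize_pixel(rgb):
    """Apply (value - mean) / std per channel to an RGB triple in [0, 1]."""
    return tuple((v - m) / s for v, m, s in zip(rgb, IMAGE_MEAN, IMAGE_STD))


def video_token_count(num_frames, height, width):
    """Estimate placeholder tokens for a clip (assumed formula, see lead-in).

    Assumes frames are grouped in runs of TEMPORAL_PATCH_SIZE, each frame is
    cut into PATCH_SIZE x PATCH_SIZE patches, and MERGE_SIZE x MERGE_SIZE
    neighbouring patches are merged into one token.
    """
    block = PATCH_SIZE * MERGE_SIZE
    if num_frames % TEMPORAL_PATCH_SIZE or height % block or width % block:
        raise ValueError("dimensions must be multiples of the patch/merge sizes")
    grid_t = num_frames // TEMPORAL_PATCH_SIZE
    grid_h = height // PATCH_SIZE
    grid_w = width // PATCH_SIZE
    return grid_t * (grid_h // MERGE_SIZE) * (grid_w // MERGE_SIZE)


print(normalize_pixel((0.0, 0.5, 1.0)))
print(video_token_count(num_frames=4, height=224, width=224))
```

With a mean and standard deviation of 0.5, pixel values in [0, 1] map onto [-1, 1]. Under the assumed formula, a 4-frame 224x224 clip yields 2 temporal groups of 7x7 merged patches.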

vocab.json

ADDED

|

The diff for this file is too large to render.

See raw diff

|
