LongVILA-R1-7B

Introduction:

LongVILA-R1-7B demonstrates strong performance in long video reasoning, achieving 70.7% on VideoMME (w/ sub.) and surpassing Gemini-1.5-Pro across diverse reasoning tasks. Long-RL is a codebase that accelerates long video RL training by up to 2.1× through its MR-SP system. It supports RL training on image, video, and omni inputs across VILA, Qwen/Qwen-VL, and diffusion models.

Key Enhancements:

LongVILA-R1-7B supports both multiple-choice questions and open-ended questions.


Evaluation:

LongVideo-Reason-eval

| Models | Temporal | Goal | Plot | Spatial | Overall |
|---|---|---|---|---|---|
| LongVILA-R1-7B | 68.1 | 85.7 | 70.6 | 53.3 | 72.0 |

VideoMME

| Models | VideoMME (w/o sub.) | VideoMME (w/ sub.) |
|---|---|---|
| LongVILA-R1-7B | 65.0 | 70.7 |

Installation

Dependency setup:

```bash
git clone https://github.com/NVlabs/Long-RL.git
cd Long-RL
pip install -e .
```

If you want to train Qwen-Omni models, also run:

```bash
bash vllm_replace.sh
```

Usage

```python
from transformers import AutoConfig, AutoModel, GenerationConfig
from termcolor import colored

model_path = "Efficient-Large-Model/LongVILA-R1-7B"

# Option 1: instantiate the model from its config
config = AutoConfig.from_pretrained(model_path, trust_remote_code=True)
model = AutoModel.from_config(config, trust_remote_code=True)

# Option 2: load it directly with from_pretrained
model = AutoModel.from_pretrained(model_path, trust_remote_code=True, device_map="auto")

generation_config = GenerationConfig(
    max_new_tokens=1024,
    do_sample=False,  # greedy decoding
    temperature=1,  # HACK: kept at 1 because sampling is disabled
    num_return_sequences=1,
)

prompt = ""  # fill in your question
video_path = ""  # fill in the path to your video file

# A message is a list mixing text and media entries
message = [
    prompt,
    {"path": video_path},
]

# Generate a response conditioned on the video
response = model.generate_content(message, generation_config=generation_config)
print(colored(response, "cyan", attrs=["bold"]))
```
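LongVILA-R1-7B accepts both multiple-choice and open-ended questions, and the question text goes into `prompt` in the message list. The exact prompt template used during training is not documented in this card, so the helper below is only a hypothetical sketch: `build_prompt`, its "A. ..." option layout, and the closing instruction line are our own assumptions, not part of the Long-RL or VILA API.

```python
import string
from typing import Optional, Sequence


def build_prompt(question: str, options: Optional[Sequence[str]] = None) -> str:
    """Format an open-ended or multiple-choice question (hypothetical template)."""
    if not options:
        return question  # open-ended: pass the question through unchanged
    if len(options) > len(string.ascii_uppercase):
        raise ValueError("too many options to label with letters A-Z")
    lines = [question]
    # Label each option with a capital letter: "A. ...", "B. ...", ...
    for letter, option in zip(string.ascii_uppercase, options):
        lines.append(f"{letter}. {option}")
    # Assumed instruction line; adjust to whatever format works for your task
    lines.append("Answer with the option's letter from the given choices.")
    return "\n".join(lines)


prompt = build_prompt(
    "What is the main goal of the player in this video?",
    ["Score a goal", "Defend the base", "Collect coins"],
)
print(prompt)
```

The returned string can be assigned to `prompt` before calling `model.generate_content`; for an open-ended question, call `build_prompt(question)` without options.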

VILA Model Card

Model details

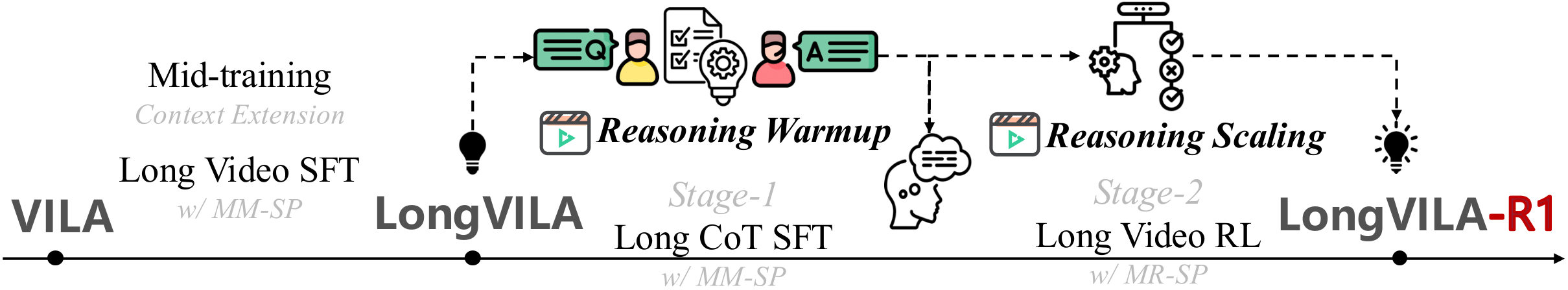

Model type: We introduce a full-stack framework that scales up reasoning in vision-language models (VLMs) to long videos using reinforcement learning. It addresses the unique challenges of long video reasoning by integrating three critical components: (1) a large-scale dataset, LongVideo-Reason, comprising 52K long video QA pairs with high-quality reasoning annotations across diverse domains such as sports, games, and vlogs; (2) a two-stage training pipeline that extends VLMs with chain-of-thought supervised fine-tuning (CoT-SFT) and reinforcement learning (RL); and (3) a training infrastructure for long video RL, named Multi-modal Reinforcement Sequence Parallelism (MR-SP), which incorporates sequence parallelism and a vLLM-based engine tailored for long video, using cached video embeddings for efficient rollout and prefilling. Notably, MR-SP achieves up to a 2.1× speedup on long video RL training.

Model date: LongVILA-R1 was trained in July 2025.
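The MR-SP description above rests on two ideas: video embeddings are computed once and reused across rollouts, and the long token sequence is split across GPUs. The toy sketch below illustrates only those two ideas in pure Python, under our own assumptions; `VideoEmbeddingCache`, `shard_sequence`, and `fake_encoder` are hypothetical names, not Long-RL APIs, and the actual system works on GPU tensors with a vLLM-based engine.

```python
from typing import Callable, Dict, List, Sequence

Embedding = List[float]  # toy stand-in for one video-token embedding vector


class VideoEmbeddingCache:
    """Encode each video once and reuse the result across rollouts (hypothetical)."""

    def __init__(self, encode_fn: Callable[[str], List[Embedding]]):
        self._encode_fn = encode_fn
        self._cache: Dict[str, List[Embedding]] = {}

    def get(self, video_path: str) -> List[Embedding]:
        # Run the (expensive) encoder only on a cache miss
        if video_path not in self._cache:
            self._cache[video_path] = self._encode_fn(video_path)
        return self._cache[video_path]


def shard_sequence(seq: Sequence[Embedding], world_size: int, rank: int) -> List[Embedding]:
    """Return the contiguous slice of `seq` owned by `rank`; sizes differ by at most 1."""
    base, extra = divmod(len(seq), world_size)
    start = rank * base + min(rank, extra)
    end = start + base + (1 if rank < extra else 0)
    return list(seq[start:end])


# --- toy demo ---------------------------------------------------------------
calls = {"n": 0}


def fake_encoder(path: str) -> List[Embedding]:
    """Hypothetical stand-in for a vision encoder: 10 tokens of dimension 2."""
    calls["n"] += 1
    return [[float(i), float(i)] for i in range(10)]


cache = VideoEmbeddingCache(fake_encoder)
num_rollouts, world_size = 8, 4
for _ in range(num_rollouts):  # several sampled responses for the same video
    emb = cache.get("video.mp4")  # encoder runs only on the first iteration
    shards = [shard_sequence(emb, world_size, r) for r in range(world_size)]

print(calls["n"])  # 1
print([len(s) for s in shards])  # [3, 3, 2, 2]
```

Caching pays off because every rollout for a given prompt shares the same video, so the encoder cost is paid once rather than once per sample; contiguous sharding keeps each rank's slice in order so it can be concatenated back.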

Paper or resources for more information: https://github.com/NVLabs/Long-RL

```bibtex
@misc{long-rl,
  title = {Long-RL: Scaling RL to Long Sequences},
  author = {Yukang Chen and Wei Huang and Shuai Yang and Qinghao Hu and Baifeng Shi and Hanrong Ye and Ligeng Zhu and Zhijian Liu and Pavlo Molchanov and Jan Kautz and Xiaojuan Qi and Sifei Liu and Hongxu Yin and Yao Lu and Song Han},
  year = {2025},
  publisher = {GitHub},
  journal = {GitHub repository},
  howpublished = {\url{https://github.com/NVlabs/Long-RL}}
}

@article{chen2025longvila-r1,
  title = {Scaling RL to Long Videos},
  author = {Yukang Chen and Wei Huang and Baifeng Shi and Qinghao Hu and Hanrong Ye and Ligeng Zhu and Zhijian Liu and Pavlo Molchanov and Jan Kautz and Xiaojuan Qi and Sifei Liu and Hongxu Yin and Yao Lu and Song Han},
  year = {2025},
  eprint = {2507.07966},
  archivePrefix = {arXiv},
  primaryClass = {cs.CV}
}

@inproceedings{chen2024longvila,
  title = {LongVILA: Scaling Long-Context Visual Language Models for Long Videos},
  author = {Yukang Chen and Fuzhao Xue and Dacheng Li and Qinghao Hu and Ligeng Zhu and Xiuyu Li and Yunhao Fang and Haotian Tang and Shang Yang and Zhijian Liu and Ethan He and Hongxu Yin and Pavlo Molchanov and Jan Kautz and Linxi Fan and Yuke Zhu and Yao Lu and Song Han},
  booktitle = {The International Conference on Learning Representations (ICLR)},
  year = {2025}
}
```

License

- The code is released under the Apache 2.0 license as found in the LICENSE file.
- The pretrained weights are released under the CC-BY-NC-SA-4.0 license.
- The service is a research preview intended for non-commercial use only, and is subject to the following licenses and terms:
  - Terms of Use of the data generated by OpenAI
  - Dataset licenses for each dataset used during training

Where to send questions or comments about the model: https://github.com/NVLabs/Long-RL/issues

Intended use

Primary intended uses: The primary use of LongVILA-R1 is research on large multimodal models and chatbots.

Primary intended users: The primary intended users of the model are researchers and hobbyists in computer vision, natural language processing, machine learning, and artificial intelligence.

Input:

Input Type: Video
Input Format: MP4
Input Parameters: 2D, 3D

Output:

Output Type: Text
Output Format: String

[Preferred/Supported] Operating System(s):

Linux

Inference:

Engine:

- PyTorch

Test Hardware:

- A100

- H100

- A6000

Ethical Considerations

NVIDIA believes Trustworthy AI is a shared responsibility and we have established policies and practices to enable development for a wide array of AI applications. When downloaded or used in accordance with our terms of service, developers should work with their internal model team to ensure this model meets requirements for the relevant industry and use case and addresses unforeseen product misuse.
