SentenceTransformer based on BAAI/bge-m3

This is a sentence-transformers model finetuned from BAAI/bge-m3. It maps sentences & paragraphs to a 1024-dimensional dense vector space and can be used for semantic textual similarity, semantic search, paraphrase mining, text classification, clustering, and more.

Model Details

Model Description

- Model Type: Sentence Transformer

- Base model: BAAI/bge-m3

- Maximum Sequence Length: 8192 tokens

- Output Dimensionality: 1024 dimensions

- Similarity Function: Cosine Similarity

Model Sources

- Documentation: Sentence Transformers Documentation

- Repository: Sentence Transformers on GitHub

- Hugging Face: Sentence Transformers on Hugging Face

Full Model Architecture

```
SentenceTransformer(
  (0): Transformer({'max_seq_length': 8192, 'do_lower_case': False, 'architecture': 'XLMRobertaModel'})
  (1): Pooling({'word_embedding_dimension': 1024, 'pooling_mode_cls_token': True, 'pooling_mode_mean_tokens': False, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False, 'pooling_mode_weightedmean_tokens': False, 'pooling_mode_lasttoken': False, 'include_prompt': True})
  (2): Normalize()
)
```
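The Pooling and Normalize modules above can be sketched in a few lines of NumPy. This is a minimal illustration, not the library's implementation: the random array stands in for the token embeddings the XLMRobertaModel would produce, and the helper names cls_pool_and_normalize and cosine_similarity_matrix are hypothetical. It shows why, with CLS pooling followed by L2 normalization, cosine similarity reduces to a plain dot product.

```python
import numpy as np


def cls_pool_and_normalize(token_embeddings: np.ndarray) -> np.ndarray:
    """token_embeddings: (batch, seq_len, hidden) array from the transformer."""
    # pooling_mode_cls_token=True: keep only the first token's vector per input
    cls = token_embeddings[:, 0, :]
    # Normalize(): rescale each vector to unit L2 norm (eps avoids division by zero)
    norms = np.linalg.norm(cls, axis=1, keepdims=True)
    return cls / np.clip(norms, 1e-12, None)


def cosine_similarity_matrix(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    # For unit-length rows, cosine similarity is just the dot product
    return a @ b.T


rng = np.random.default_rng(0)
# Hypothetical stand-in for transformer output: 3 inputs, 16 tokens, 1024 dims
fake_token_embeddings = rng.normal(size=(3, 16, 1024))
emb = cls_pool_and_normalize(fake_token_embeddings)
print(emb.shape)  # (3, 1024)
sims = cosine_similarity_matrix(emb, emb)
print(np.round(np.diag(sims), 4))  # [1. 1. 1.]
```

Because normalization happens inside the model, downstream code can use fast dot-product search without re-normalizing.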

Usage

Direct Usage (Sentence Transformers)

First install the Sentence Transformers library:

```bash
pip install -U sentence-transformers
```

Then you can load this model and run inference.

from sentence_transformers import SentenceTransformer

# Download from the 🤗 Hub

model = SentenceTransformer("aaa961/finetuned-bge-m3-base-en")

# Run inference

sentences = [

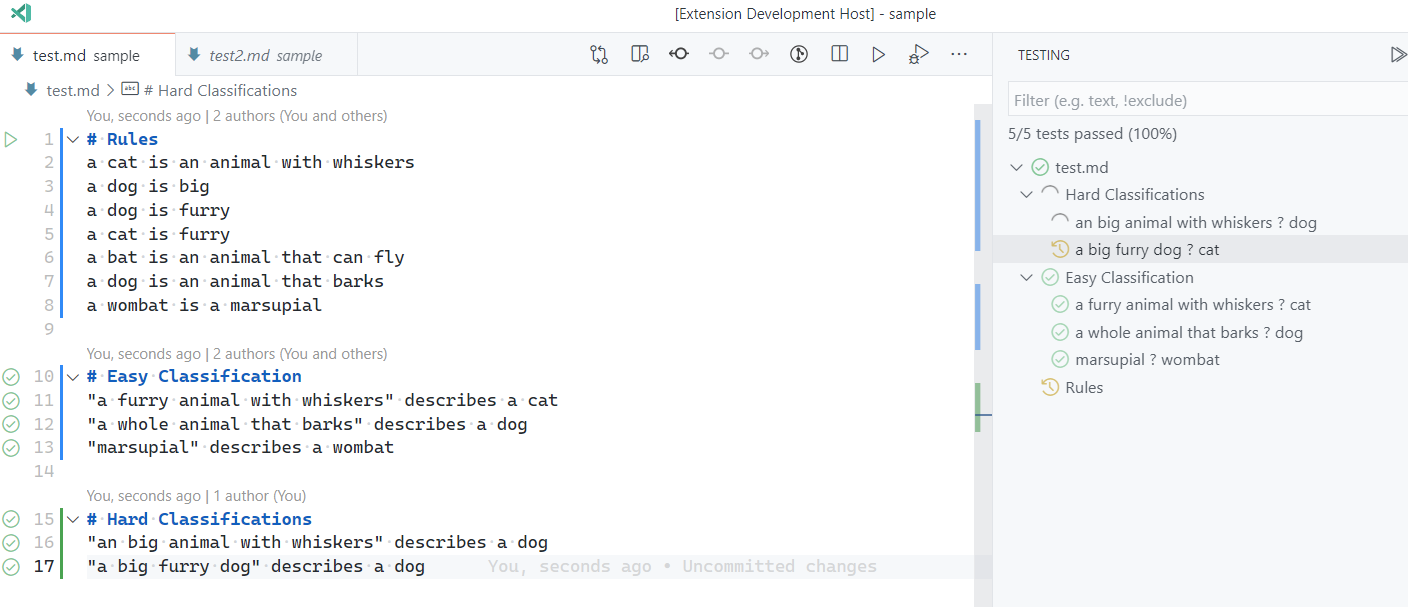

'Shell integration: bash and zsh don\'t serialize \\n and ; characters Part of https://github.com/microsoft/vscode/issues/155639\r\n\r\nRepro:\r\n\r\n1. Open a bash or zsh session\r\n2. Run:\r\n ```sh\r\n echo "a\r\n … b"\r\n ```\r\n \r\n3. ctrl+alt+r to run recent command, select the last command, 🐛 it\'s run without the new line\r\n \r\n',

'TreeView state out of sync Testing #117304\r\n\r\nRepro: Not Sure\r\n\r\nTest state shows passed in file but still running in tree view.\r\n\r\n\r\n',

'Setting icon and color in createTerminal API no longer works correctly See https://github.com/fabiospampinato/vscode-terminals/issues/77\r\n\r\nLooks like the default tab color/icon change probably regressed this.\r\n\r\n',

]

embeddings = model.encode(sentences)

print(embeddings.shape)

# [3, 1024]

# Get the similarity scores for the embeddings

similarities = model.similarity(embeddings, embeddings)

print(similarities)

# tensor([[1.0000, 0.4264, 0.4315],

# [0.4264, 1.0000, 0.4278],

# [0.4315, 0.4278, 1.0000]])
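Because the final Normalize() module returns unit-length vectors, ranking a corpus by cosine similarity is the same as ranking by dot product, which is what semantic search relies on. The sketch below assumes embeddings shaped like the model.encode output above; to stay runnable without downloading the model, it uses small hand-made unit vectors, and the helper top_k_by_cosine is hypothetical. (The library itself also offers sentence_transformers.util.semantic_search for this task.)

```python
import numpy as np


def top_k_by_cosine(query_emb: np.ndarray, corpus_emb: np.ndarray, k: int = 2):
    """Return (index, score) pairs for the k corpus rows most similar to the query.

    Assumes both inputs are already L2-normalized, as this model's outputs are.
    """
    scores = corpus_emb @ query_emb        # one cosine score per corpus row
    order = np.argsort(-scores)[:k]        # indices of the k highest scores
    return [(int(i), float(scores[i])) for i in order]


# Toy unit vectors standing in for model.encode(...) outputs
corpus = np.array([[1.0, 0.0], [0.6, 0.8], [0.0, 1.0]])
query = np.array([0.8, 0.6])
results = top_k_by_cosine(query, corpus, k=2)
print([i for i, _ in results])  # [1, 0]
```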

Evaluation

Metrics

Triplet

- Dataset: bge-base-en-train
- Evaluated with TripletEvaluator

| Metric | Value |
|---|---|
| cosine_accuracy | 1.0 |

Triplet

- Dataset: bge-base-en-train
- Evaluated with TripletEvaluator

| Metric | Value |
|---|---|
| cosine_accuracy | 0.9524 |
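As we understand TripletEvaluator, cosine_accuracy is the fraction of (anchor, positive, negative) triplets for which the anchor is more similar to its positive than to its negative. A minimal NumPy sketch of that metric, with a hypothetical helper name and toy 2-D vectors rather than real model outputs:

```python
import numpy as np


def _unit(x: np.ndarray) -> np.ndarray:
    # L2-normalize each row so dot products become cosine similarities
    return x / np.linalg.norm(x, axis=1, keepdims=True)


def triplet_cosine_accuracy(anchors, positives, negatives) -> float:
    a, p, n = _unit(anchors), _unit(positives), _unit(negatives)
    pos_sim = np.sum(a * p, axis=1)
    neg_sim = np.sum(a * n, axis=1)
    # A triplet counts as correct when the positive is strictly more similar
    return float(np.mean(pos_sim > neg_sim))


anchors = np.array([[1.0, 0.0], [0.0, 1.0]])
positives = np.array([[0.9, 0.1], [1.0, 0.0]])   # second positive is far from its anchor
negatives = np.array([[0.0, 1.0], [0.1, 0.9]])   # second negative is close to its anchor
print(triplet_cosine_accuracy(anchors, positives, negatives))  # 0.5
```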

Training Details

Training Dataset

Unnamed Dataset

- Size: 336 training samples

- Columns: texts and label
- Approximate statistics based on the first 336 samples:
- texts: type string; min: 12 tokens, mean: 340.9 tokens, max: 996 tokens
- label: type int; share of samples per label value:

- 0: ~1.19%

- 5: ~0.60%

- 7: ~0.60%

- 8: ~0.60%

- 9: ~0.60%

- 10: ~0.60%

- 12: ~0.60%

- 13: ~0.60%

- 15: ~0.60%

- 16: ~0.60%

- 17: ~0.60%

- 19: ~0.60%

- 20: ~0.60%

- 21: ~0.60%

- 25: ~0.60%

- 26: ~0.60%

- 27: ~0.60%

- 28: ~0.60%

- 29: ~0.89%

- 30: ~0.89%

- 31: ~2.08%

- 33: ~1.49%

- 34: ~0.60%

- 36: ~0.60%

- 37: ~0.89%

- 38: ~0.60%

- 42: ~0.89%

- 43: ~1.19%

- 45: ~0.60%

- 47: ~0.60%

- 48: ~0.60%

- 49: ~0.60%

- 50: ~0.60%

- 51: ~0.60%

- 52: ~1.19%

- 53: ~0.60%

- 55: ~0.60%

- 57: ~1.19%

- 59: ~0.60%

- 60: ~0.60%

- 61: ~0.60%

- 62: ~0.60%

- 63: ~0.60%

- 64: ~0.89%

- 65: ~0.60%

- 67: ~0.60%

- 68: ~0.89%

- 69: ~0.60%

- 70: ~0.60%

- 71: ~0.60%

- 72: ~0.60%

- 73: ~0.60%

- 74: ~0.60%

- 75: ~0.60%

- 77: ~0.60%

- 78: ~0.60%

- 82: ~0.89%

- 84: ~0.60%

- 85: ~0.60%

- 86: ~0.60%

- 87: ~0.60%

- 88: ~0.60%

- 89: ~0.60%

- 90: ~0.60%

- 91: ~0.60%

- 92: ~0.89%

- 93: ~0.60%

- 95: ~0.60%

- 96: ~0.60%

- 97: ~0.60%

- 98: ~0.60%

- 99: ~0.60%

- 100: ~0.60%

- 101: ~0.60%

- 103: ~0.60%

- 105: ~0.60%

- 108: ~0.60%

- 109: ~0.60%

- 110: ~0.89%

- 113: ~0.60%

- 115: ~0.60%

- 116: ~0.60%

- 117: ~0.60%

- 118: ~0.60%

- 119: ~0.60%

- 120: ~1.49%

- 121: ~0.60%

- 126: ~0.60%

- 127: ~0.60%

- 130: ~0.60%

- 131: ~0.60%

- 134: ~0.60%

- 135: ~0.60%

- 136: ~0.60%

- 137: ~0.60%

- 138: ~0.60%

- 139: ~0.60%

- 140: ~0.60%

- 143: ~0.60%

- 144: ~0.60%

- 147: ~0.60%

- 148: ~0.60%

- 149: ~0.60%

- 150: ~0.60%

- 152: ~0.60%

- 153: ~0.60%

- 154: ~0.60%

- 155: ~0.60%

- 157: ~0.60%

- 158: ~0.60%

- 159: ~0.60%

- 160: ~0.60%

- 161: ~0.60%

- 163: ~0.60%

- 164: ~0.60%

- 165: ~0.60%

- 168: ~0.60%

- 170: ~0.60%

- 171: ~0.89%

- 174: ~0.89%

- 175: ~0.60%

- 176: ~0.60%

- 177: ~0.60%

- 178: ~0.60%

- 179: ~0.60%

- 180: ~0.60%

- 181: ~0.60%

- 182: ~0.60%

- 183: ~0.60%

- 185: ~0.60%

- 186: ~0.60%

- 187: ~0.60%

- 192: ~0.60%

- 193: ~0.60%

- 194: ~0.60%

- 195: ~0.89%

- 196: ~0.60%

- 197: ~0.60%

- 198: ~0.60%

- 199: ~0.60%

- 202: ~0.60%

- 204: ~0.60%

- 205: ~0.89%

- 207: ~0.60%

- 208: ~0.60%

- 209: ~0.60%

- 210: ~0.60%

- 213: ~0.60%

- 214: ~0.60%

- 215: ~0.60%

- 216: ~0.60%

- 218: ~0.60%
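Each percentage above is a label's count divided by the 336 training samples, so ~0.60%, ~0.89%, ~1.19%, ~1.49% and ~2.08% appear to correspond to 2, 3, 4, 5 and 7 samples respectively. A small sketch of that arithmetic (label_distribution is a hypothetical helper, not part of the training code):

```python
from collections import Counter


def label_distribution(labels):
    """Map each label ID to its share of the samples, in percent (2 decimals)."""
    total = len(labels)
    return {label: round(100 * count / total, 2) for label, count in sorted(Counter(labels).items())}


# Per-label sample counts that reproduce the percentages listed for 336 samples
for count in (2, 3, 4, 5, 7):
    print(count, f"{100 * count / 336:.2f}%")
# 2 0.60%
# 3 0.89%
# 4 1.19%
# 5 1.49%
# 7 2.08%
```

The same arithmetic over the 70 evaluation samples gives 2.86% for 2 samples, 4.29% for 3 and 5.71% for 4.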

- Samples:

Sample 1 (label: 218):

Branch list is sometimes out of order

Type: Bug

1. Open a workspace
2. Quickly open the branch picker and type main

Bug

The first time you do this, sometimes you end up with an unordered list:

The correct order shows up when you keep start typing or try doing this again:

VS Code version: Code - Insiders 1.91.0-insider (Universal) (0354163c1c66b950b0762364f5b4cd37937b624a, 2024-06-26T10:12:33.304Z)
OS version: Darwin arm64 23.5.0
Modes:

System Info
|Item|Value|
|---|---|
|CPUs|Apple M2 Max (12 x 2400)|
|GPU Status|2d_canvas: unavailable_software
canvas_oop_rasterization: disabled_off
direct_rendering_display_compositor: disabled_off_ok
gpu_compositing: disabled_software
multiple_raster_threads: enabled_on
ope...

Sample 2 (label: 218):

Git Branch Picker Race Condition If I paste the branch too quickly and then press enter, it does not switch to it, but creates a new branch.

This breaks muscle memory, as it works when you do it slowly.

Once loading completes, it should select the branch again.

Sample 3 (label: 177):

links aren't discoverable to screen reader users in markdown documents They're only discoverable via visual distinction and the action that can be taken (IE opening them) is only indicated in the tooltip AFAICT.

https://github.com/microsoft/vscode/assets/29464607/09d28b81-c2cc-4477-b1fc-7b1de1baae74

- Loss: BatchSemiHardTripletLoss
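BatchSemiHardTripletLoss mines triplets inside each batch from the label column: for every anchor-positive pair sharing a label, it picks the closest negative that is still farther away than the positive (a "semi-hard" negative), falls back to the farthest negative when none exists, and applies a hinge with a margin. The NumPy sketch below follows that FaceNet-style recipe; the Euclidean distance and the margin=5.0 default are assumptions meant to mirror the library's defaults, and the function is a hypothetical illustration rather than the library code.

```python
import numpy as np


def batch_semi_hard_triplet_loss(embeddings, labels, margin=5.0):
    """Semi-hard triplet loss over one batch, using Euclidean distances (sketch)."""
    labels = np.asarray(labels)
    diff = embeddings[:, None, :] - embeddings[None, :, :]
    dist = np.sqrt(np.maximum(np.sum(diff ** 2, axis=-1), 0.0))  # pairwise distances
    same = labels[:, None] == labels[None, :]
    losses = []
    for a in range(len(labels)):
        neg_d = dist[a][~same[a]]  # distances from anchor a to every negative
        if neg_d.size == 0:
            continue  # no negatives for this anchor in the batch
        for p in range(len(labels)):
            if p == a or not same[a, p]:
                continue
            d_ap = dist[a, p]
            # Semi-hard negatives: farther from the anchor than the positive
            semi_hard = neg_d[neg_d > d_ap]
            # Closest semi-hard negative; otherwise fall back to the farthest negative
            d_an = semi_hard.min() if semi_hard.size else neg_d.max()
            losses.append(max(d_ap - d_an + margin, 0.0))
    # Average over all anchor-positive pairs that were scored
    return float(np.mean(losses)) if losses else 0.0


emb = np.array([[0.0, 0.0], [1.0, 0.0], [3.0, 0.0], [4.0, 0.0]])
labels = [0, 0, 1, 1]
print(batch_semi_hard_triplet_loss(emb, labels, margin=1.0))  # 0.0
print(batch_semi_hard_triplet_loss(emb, labels))              # 3.5
```

With a small margin the two well-separated clusters already satisfy every triplet; the larger assumed default margin keeps pushing them apart.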

Evaluation Dataset

Unnamed Dataset

- Size: 70 evaluation samples

- Columns: texts and label
- Approximate statistics based on the first 70 samples:
- texts: type string; min: 58 tokens, mean: 303.57 tokens, max: 864 tokens
- label: type int; share of samples per label value:

- 1: ~2.86%

- 2: ~2.86%

- 6: ~2.86%

- 11: ~5.71%

- 14: ~2.86%

- 23: ~2.86%

- 32: ~5.71%

- 35: ~2.86%

- 39: ~2.86%

- 40: ~2.86%

- 46: ~2.86%

- 54: ~2.86%

- 83: ~2.86%

- 102: ~2.86%

- 104: ~4.29%

- 111: ~2.86%

- 122: ~2.86%

- 123: ~2.86%

- 125: ~2.86%

- 145: ~2.86%

- 146: ~2.86%

- 162: ~2.86%

- 166: ~2.86%

- 169: ~2.86%

- 184: ~2.86%

- 188: ~2.86%

- 190: ~2.86%

- 200: ~2.86%

- 201: ~4.29%

- 203: ~2.86%

- 206: ~2.86%

- 217: ~2.86%

- Samples:
