---
license: apache-2.0
language:
- en
base_model:
- Ultralytics/YOLO11
tags:
- yolo
- yolo11
- nsfw
pipeline_tag: object-detection
---

EraX-NSFW-V1.0

A Highly Efficient Model for NSFW Detection

Developed by:

- Phạm Đình Thục ([email protected])

- Mr. Nguyễn Anh Nguyên ([email protected])

License: Apache 2.0

Model Details / Overview

- Model Architecture: YOLO11m

- Task: Object Detection (NSFW Detection)

- Dataset: Private datasets collected from the Internet.

- Training set: 31,890 images.

- Validation set: 11,538 images.

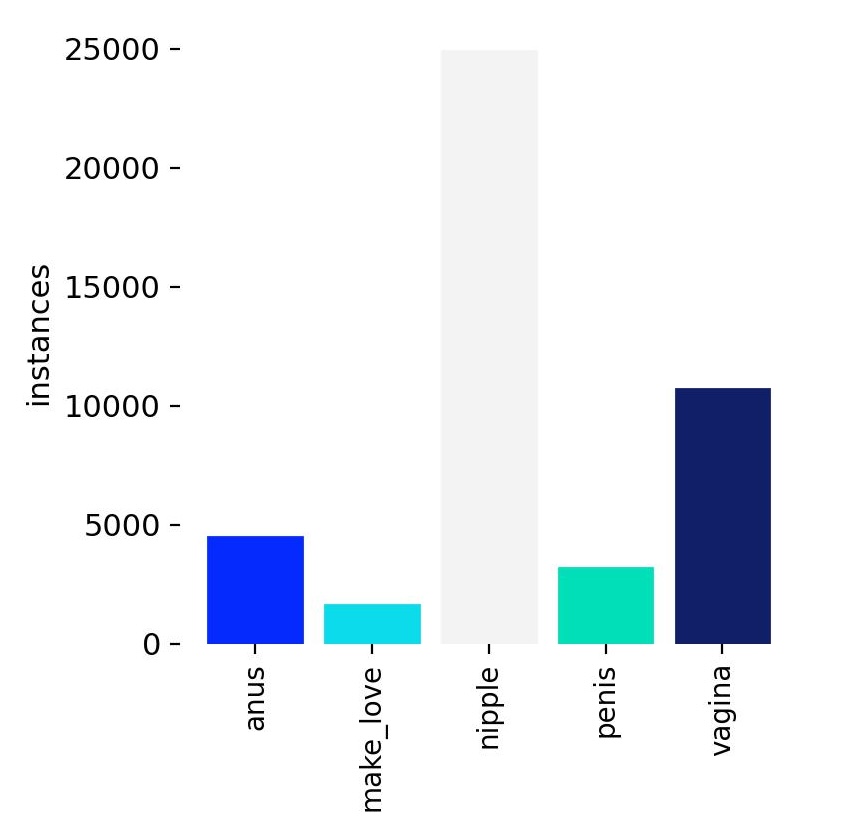

- Classes: anus, make_love, nipple, penis, vagina.

Labels

Training Configuration

- Model Weights File: erax_nsfw_yolo11m.pt

- Number of Epochs: 100

- Learning Rate: 0.01

- Batch Size: 208

- Image Size: 640x640

- Training server: 8 × NVIDIA RTX A4000 (16 GB GDDR6 each)

- Training time: ~10 hours
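For readers reproducing a similar run, the configuration above can be expressed as keyword arguments to Ultralytics' `YOLO.train()`. This is a sketch under assumptions, not the authors' training script (that lives in the repository linked under Training): the dataset file `nsfw_data.yaml` and the starting checkpoint `yolo11m.pt` are hypothetical placeholders, and the per-GPU figure assumes Ultralytics splits the total `batch` evenly across the eight DDP workers.

```python
# Sketch: the card's training configuration as Ultralytics train() keyword arguments.
# "nsfw_data.yaml" and "yolo11m.pt" are hypothetical placeholders, not taken from the card.


def build_train_args(num_gpus: int = 8) -> dict:
    """Return keyword arguments mirroring the Training Configuration list."""
    return {
        "data": "nsfw_data.yaml",         # hypothetical dataset config file
        "epochs": 100,                    # Number of Epochs
        "lr0": 0.01,                      # initial learning rate
        "batch": 208,                     # total batch size across all GPUs
        "imgsz": 640,                     # 640x640 input images
        "device": list(range(num_gpus)),  # 8 x RTX A4000 -> CUDA devices 0..7
    }


def per_gpu_batch(args: dict) -> int:
    # Assumption: the total batch is divided evenly among the DDP workers
    return args["batch"] // len(args["device"])


args = build_train_args()
print(per_gpu_batch(args))  # 208 // 8 images per GPU per step

# With ultralytics installed and a real dataset file, a run would be launched as:
# from ultralytics import YOLO
# YOLO("yolo11m.pt").train(**args)
```

Under that assumption, each A4000 processes 26 images per step, which is what has to fit in 16 GB at 640x640.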

Evaluation Metrics

Below are the key metrics from the model evaluation on the validation set:

- Precision: 0.726

- Recall: 0.68

- mAP50: 0.724

- mAP50-95: 0.434
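mAP50 counts a prediction as correct when its Intersection over Union (IoU) with a ground-truth box is at least 0.50, while mAP50-95 averages AP over ten IoU thresholds from 0.50 to 0.95 in steps of 0.05, which is why it is lower. The sketch below computes IoU for two axis-aligned boxes and counts how many of those ten thresholds a single match would pass; the box coordinates are made-up illustration values, not data from this model.

```python
import numpy as np


def box_iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)  # overlap area (0 if disjoint)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


# The ten IoU thresholds averaged by mAP50-95: 0.50, 0.55, ..., 0.95
IOU_THRESHOLDS = np.linspace(0.50, 0.95, 10)

# Illustrative boxes (made-up values): a prediction offset by 10 px from the ground truth
gt = (0, 0, 100, 100)
pred = (10, 10, 110, 110)
iou = box_iou(gt, pred)
passed = IOU_THRESHOLDS <= iou
print(round(iou, 3), int(passed.sum()), "of", len(IOU_THRESHOLDS))
```

Here the IoU is about 0.68, so the match counts fully toward mAP50 but only at 4 of the 10 thresholds used by mAP50-95. The same IoU measure is what the `iou=IOU_THRESHOLD` argument in the inference example controls: it sets how much two predicted boxes may overlap before non-maximum suppression drops the lower-scoring one.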

| Format | Status | Size (MB) | mAP50-95 (box) | Inference time (ms/image) | FPS |
|---|---|---|---|---|---|
| PyTorch | ✅ | 38.6 | 0.4332 | 16.97 | 58.91 |
| TorchScript | ✅ | 77 | 0.4153 | 12.09 | 82.69 |
| ONNX | ✅ | 76.7 | 0.4153 | 103.94 | 9.62 |
| OpenVINO | ❌ | - | - | - | - |
| TensorRT | ✅ | 89.6 | 0.4155 | 7 | 142.92 |
| CoreML | ❌ | - | - | - | - |
| TensorFlow SavedModel | ✅ | 192.3 | 0.4153 | 40.19 | 24.88 |
| TensorFlow GraphDef | ✅ | 76.8 | 0.4153 | 36.71 | 27.24 |
| TensorFlow Lite | ❌ | - | - | - | - |
| TensorFlow Edge TPU | ❌ | - | - | - | - |
| TensorFlow.js | ❌ | - | - | - | - |
| PaddlePaddle | ✅ | 153.3 | 0.4153 | 1024.24 | 0.98 |
| NCNN | ✅ | 76.6 | 0.4153 | 187.36 | 5.34 |
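The FPS column is the reciprocal of the per-image inference time, FPS = 1000 / latency (ms). The sketch below recomputes it from the latencies in the table for the formats that exported successfully; small differences from the reported FPS (58.93 here vs 58.91 for PyTorch) are presumably because the table rounds latencies to two decimals before display.

```python
# Latencies (ms per image) copied from the benchmark table for the successful exports
LATENCY_MS = {
    "PyTorch": 16.97,
    "TorchScript": 12.09,
    "ONNX": 103.94,
    "TensorRT": 7.0,
    "TensorFlow SavedModel": 40.19,
    "TensorFlow GraphDef": 36.71,
    "PaddlePaddle": 1024.24,
    "NCNN": 187.36,
}


def fps_from_latency(ms_per_image: float) -> float:
    """Images per second for a given per-image latency in milliseconds."""
    return 1000.0 / ms_per_image


def speedup_vs(baseline: str, fmt: str) -> float:
    """How many times faster `fmt` is than `baseline` (ratio of latencies)."""
    return LATENCY_MS[baseline] / LATENCY_MS[fmt]


# Print formats from fastest to slowest
for fmt, ms in sorted(LATENCY_MS.items(), key=lambda kv: kv[1]):
    print(f"{fmt:<22} {ms:>8.2f} ms  {fps_from_latency(ms):>7.2f} FPS")

print(f"TensorRT vs PyTorch: {speedup_vs('PyTorch', 'TensorRT'):.2f}x")
```

By this table, TensorRT is about 2.4 times faster than the native PyTorch weights, while mAP50-95 drops from 0.4332 to 0.4155.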

Training Validation Results

Training and Validation Losses

Confusion Matrix

Inference

To use the trained model, follow these steps:

- Install the necessary packages:

pip install ultralytics supervision

- Simple Use Case:

from ultralytics import YOLO
import numpy as np
import supervision as sv

IOU_THRESHOLD = 0.3         # IoU threshold used by non-maximum suppression
CONFIDENCE_THRESHOLD = 0.2  # discard detections below this confidence score
KERNEL_SIZE = 20            # blur kernel size used by BlurAnnotator

pretrained_path = "erax_nsfw_yolo11m.pt"
image_path_list = ["img_0.jpg", "img_1.jpg"]

model = YOLO(pretrained_path)
results = model(image_path_list, conf=CONFIDENCE_THRESHOLD, iou=IOU_THRESHOLD)

# Create the annotators once and reuse them for every image
box_annotator = sv.BoxAnnotator()
blur_annotator = sv.BlurAnnotator(kernel_size=KERNEL_SIZE)
label_annotator = sv.LabelAnnotator(text_color=sv.Color.BLACK)

for result in results:
    # Keep every class except make_love; IDs are looked up by name so they match the weights
    selected_classes = [i for i, name in result.names.items() if name != "make_love"]
    detections = sv.Detections.from_ultralytics(result)
    detections = detections[np.isin(detections.class_id, selected_classes)]

    # Blur the detected regions, then draw boxes and class labels on top
    annotated_image = blur_annotator.annotate(result.orig_img.copy(), detections=detections)
    annotated_image = box_annotator.annotate(annotated_image, detections=detections)
    annotated_image = label_annotator.annotate(annotated_image, detections=detections)

    sv.plot_image(annotated_image, size=(10, 10))
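Because `np.isin` filters on integer class IDs, the IDs to keep must match the order stored in the weights; a hard-coded list such as `[0, 2, 3, 4, 5]` silently breaks if that order differs (and ID 5 does not exist among five classes). The following self-contained sketch derives the keep-mask from class names instead. The `names` mapping in the usage is an assumption that the IDs follow the order of the Classes list; check `model.names` for the real mapping.

```python
import numpy as np


def keep_mask(class_ids, names, hidden=("make_love",)):
    """Boolean mask that is False for detections whose class name is in `hidden`.

    class_ids: array of integer class IDs (e.g. detections.class_id)
    names: mapping from class ID to class name (e.g. result.names)
    """
    allowed = [cid for cid, name in names.items() if name not in hidden]
    return np.isin(np.asarray(class_ids), allowed)


# Assumed ID order, taken from the Classes list; verify against model.names
names = {0: "anus", 1: "make_love", 2: "nipple", 3: "penis", 4: "vagina"}
class_ids = np.array([0, 1, 4, 1, 2])
print(keep_mask(class_ids, names))  # make_love (ID 1) entries are masked out
```

With `supervision`, the mask indexes detections directly, for example `detections[keep_mask(detections.class_id, result.names)]`.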

Training

Scripts for training: https://github.com/EraX-JS-Company/EraX-NSFW-V1.0

Examples

Citation

If you find our project useful, we would appreciate it if you could star our repository and cite our work as follows:

@article{EraX-NSFW-V1.0,
  author       = {Phạm Đình Thục and
                  Mr. Nguyễn Anh Nguyên and
                  Đoàn Thành Khang and
                  Mr. Trần Hải Khương and
                  Mr. Trương Công Đức and
                  Mr. Phan Nguyễn Tuấn Kha and
                  Phạm Huỳnh Nhật},
  title        = {EraX-NSFW-V1.0: A Highly Efficient Model for NSFW Detection},
  organization = {EraX JS Company},
  year         = {2024},
  url          = {https://huggingface.co/erax-ai/EraX-NSFW-V1.0}
}