RuntimeError: "You are attempting to use Flash Attention 2.0 with a model not initialized on GPU."

Hello, I encountered an error. Can you help me?

from sentence_transformers import SentenceTransformer

# 1. Load a pretrained Sentence Transformer model

model = SentenceTransformer("/data/models/jina-embeddings-v4", trust_remote_code=True)

# The sentences to encode

sentences = [

"The weather is lovely today.",

"It's so sunny outside!",

"He drove to the stadium.",

]

# 2. Calculate embeddings by calling model.encode()

embeddings = model.encode(sentences)

print(embeddings.shape)

# [3, 384]

# 3. Calculate the embedding similarities

similarities = model.similarity(embeddings, embeddings)

print(similarities)

# tensor([[1.0000, 0.6660, 0.1046],

# [0.6660, 1.0000, 0.1411],

# [0.1046, 0.1411, 1.0000]])

When I run the above, it shows the following runtime error:

You are attempting to use Flash Attention 2.0 with a model not initialized on GPU. Make sure to move the model to GPU after initializing it on CPU with `model.to('cuda')`.

---------------------------------------------------------------------------

Exception Traceback (most recent call last)

Cell In[1], line 4

1 from sentence_transformers import SentenceTransformer

3 # 1. Load a pretrained Sentence Transformer model

----> 4 model = SentenceTransformer("/data/models/jina-embeddings-v4", trust_remote_code=True)

6 # The sentences to encode

7 sentences = [

8 "The weather is lovely today.",

9 "It's so sunny outside!",

10 "He drove to the stadium.",

11 ]

File /opt/anaconda3/lib/python3.11/site-packages/sentence_transformers/SentenceTransformer.py:309, in SentenceTransformer.__init__(self, model_name_or_path, modules, device, prompts, default_prompt_name, similarity_fn_name, cache_folder, trust_remote_code, revision, local_files_only, token, use_auth_token, truncate_dim, model_kwargs, tokenizer_kwargs, config_kwargs, model_card_data, backend)

300 model_name_or_path = __MODEL_HUB_ORGANIZATION__ + "/" + model_name_or_path

302 if is_sentence_transformer_model(

303 model_name_or_path,

304 token,

(...)

307 local_files_only=local_files_only,

308 ):

--> 309 modules, self.module_kwargs = self._load_sbert_model(

310 model_name_or_path,

311 token=token,

312 cache_folder=cache_folder,

313 revision=revision,

314 trust_remote_code=trust_remote_code,

315 local_files_only=local_files_only,

316 model_kwargs=model_kwargs,

317 tokenizer_kwargs=tokenizer_kwargs,

318 config_kwargs=config_kwargs,

319 )

320 else:

321 modules = self._load_auto_model(

322 model_name_or_path,

323 token=token,

(...)

330 config_kwargs=config_kwargs,

331 )

File /opt/anaconda3/lib/python3.11/site-packages/sentence_transformers/SentenceTransformer.py:1808, in SentenceTransformer._load_sbert_model(self, model_name_or_path, token, cache_folder, revision, trust_remote_code, local_files_only, model_kwargs, tokenizer_kwargs, config_kwargs)

1805 # Try to initialize the module with a lot of kwargs, but only if the module supports them

1806 # Otherwise we fall back to the load method

1807 try:

-> 1808 module = module_class(model_name_or_path, cache_dir=cache_folder, backend=self.backend, **kwargs)

1809 except TypeError:

1810 module = module_class.load(model_name_or_path)

File /data/cache/huggingface/modules/transformers_modules/jina-embeddings-v4/custom_st.py:45, in Transformer.__init__(self, model_name_or_path, max_seq_length, config_args, model_args, tokenizer_args, cache_dir, backend, **kwargs)

40 if self.default_task and self.default_task not in self.config.task_names:

41 raise ValueError(

42 f"Invalid task: {self.default_task}. Must be one of {self.config.task_names}."

43 )

---> 45 self.model = AutoModel.from_pretrained(

46 model_name_or_path, config=self.config, cache_dir=cache_dir, **model_kwargs

47 )

48 self.processor = AutoProcessor.from_pretrained(

49 model_name_or_path,

50 cache_dir=cache_dir,

51 use_fast=True,

52 **tokenizer_kwargs,

53 )

54 self.max_seq_length = max_seq_length or 8192

File /opt/anaconda3/lib/python3.11/site-packages/transformers/models/auto/auto_factory.py:564, in _BaseAutoModelClass.from_pretrained(cls, pretrained_model_name_or_path, *model_args, **kwargs)

562 cls.register(config.__class__, model_class, exist_ok=True)

563 model_class = add_generation_mixin_to_remote_model(model_class)

--> 564 return model_class.from_pretrained(

565 pretrained_model_name_or_path, *model_args, config=config, **hub_kwargs, **kwargs

566 )

567 elif type(config) in cls._model_mapping.keys():

568 model_class = _get_model_class(config, cls._model_mapping)

File /data/cache/huggingface/modules/transformers_modules/jina-embeddings-v4/modeling_jina_embeddings_v4.py:565, in JinaEmbeddingsV4Model.from_pretrained(cls, pretrained_model_name_or_path, *args, **kwargs)

562 if not is_flash_attn_2_available():

563 kwargs["attn_implementation"] = "sdpa"

--> 565 base_model = super().from_pretrained(

566 pretrained_model_name_or_path, *args, **kwargs

567 )

569 # Configure adapter directory

570 if os.path.isdir(base_model.name_or_path):

File /opt/anaconda3/lib/python3.11/site-packages/transformers/modeling_utils.py:309, in restore_default_torch_dtype.<locals>._wrapper(*args, **kwargs)

307 old_dtype = torch.get_default_dtype()

308 try:

--> 309 return func(*args, **kwargs)

310 finally:

311 torch.set_default_dtype(old_dtype)

File /opt/anaconda3/lib/python3.11/site-packages/transformers/modeling_utils.py:4508, in PreTrainedModel.from_pretrained(cls, pretrained_model_name_or_path, config, cache_dir, ignore_mismatched_sizes, force_download, local_files_only, token, revision, use_safetensors, weights_only, *model_args, **kwargs)

4499 config = cls._autoset_attn_implementation(

4500 config,

4501 use_flash_attention_2=use_flash_attention_2,

4502 torch_dtype=torch_dtype,

4503 device_map=device_map,

4504 )

4506 with ContextManagers(model_init_context):

4507 # Let's make sure we don't run the init function of buffer modules

-> 4508 model = cls(config, *model_args, **model_kwargs)

4510 # Make sure to tie the weights correctly

4511 model.tie_weights()

File /data/cache/huggingface/modules/transformers_modules/jina-embeddings-v4/modeling_jina_embeddings_v4.py:145, in JinaEmbeddingsV4Model.__init__(self, config)

143 self._init_projection_layer(config)

144 self.post_init()

--> 145 self.processor = JinaEmbeddingsV4Processor.from_pretrained(

146 self.name_or_path, trust_remote_code=True, use_fast=True

147 )

148 self.multi_vector_projector_dim = config.multi_vector_projector_dim

149 self._task = None

File /opt/anaconda3/lib/python3.11/site-packages/transformers/processing_utils.py:1185, in ProcessorMixin.from_pretrained(cls, pretrained_model_name_or_path, cache_dir, force_download, local_files_only, token, revision, **kwargs)

1182 if token is not None:

1183 kwargs["token"] = token

-> 1185 args = cls._get_arguments_from_pretrained(pretrained_model_name_or_path, **kwargs)

1186 processor_dict, kwargs = cls.get_processor_dict(pretrained_model_name_or_path, **kwargs)

1187 return cls.from_args_and_dict(args, processor_dict, **kwargs)

File /opt/anaconda3/lib/python3.11/site-packages/transformers/processing_utils.py:1248, in ProcessorMixin._get_arguments_from_pretrained(cls, pretrained_model_name_or_path, **kwargs)

1245 else:

1246 attribute_class = cls.get_possibly_dynamic_module(class_name)

-> 1248 args.append(attribute_class.from_pretrained(pretrained_model_name_or_path, **kwargs))

1249 return args

File /opt/anaconda3/lib/python3.11/site-packages/transformers/tokenization_utils_base.py:2025, in PreTrainedTokenizerBase.from_pretrained(cls, pretrained_model_name_or_path, cache_dir, force_download, local_files_only, token, revision, trust_remote_code, *init_inputs, **kwargs)

2022 else:

2023 logger.info(f"loading file {file_path} from cache at {resolved_vocab_files[file_id]}")

-> 2025 return cls._from_pretrained(

2026 resolved_vocab_files,

2027 pretrained_model_name_or_path,

2028 init_configuration,

2029 *init_inputs,

2030 token=token,

2031 cache_dir=cache_dir,

2032 local_files_only=local_files_only,

2033 _commit_hash=commit_hash,

2034 _is_local=is_local,

2035 trust_remote_code=trust_remote_code,

2036 **kwargs,

2037 )

File /opt/anaconda3/lib/python3.11/site-packages/transformers/tokenization_utils_base.py:2278, in PreTrainedTokenizerBase._from_pretrained(cls, resolved_vocab_files, pretrained_model_name_or_path, init_configuration, token, cache_dir, local_files_only, _commit_hash, _is_local, trust_remote_code, *init_inputs, **kwargs)

2276 # Instantiate the tokenizer.

2277 try:

-> 2278 tokenizer = cls(*init_inputs, **init_kwargs)

2279 except import_protobuf_decode_error():

2280 logger.info(

2281 "Unable to load tokenizer model from SPM, loading from TikToken will be attempted instead."

2282 "(Google protobuf error: Tried to load SPM model with non-SPM vocab file).",

2283 )

File /opt/anaconda3/lib/python3.11/site-packages/transformers/models/qwen2/tokenization_qwen2_fast.py:120, in Qwen2TokenizerFast.__init__(self, vocab_file, merges_file, tokenizer_file, unk_token, bos_token, eos_token, pad_token, **kwargs)

109 unk_token = (

110 AddedToken(unk_token, lstrip=False, rstrip=False, special=True, normalized=False)

111 if isinstance(unk_token, str)

112 else unk_token

113 )

114 pad_token = (

115 AddedToken(pad_token, lstrip=False, rstrip=False, special=True, normalized=False)

116 if isinstance(pad_token, str)

117 else pad_token

118 )

--> 120 super().__init__(

121 vocab_file=vocab_file,

122 merges_file=merges_file,

123 tokenizer_file=tokenizer_file,

124 unk_token=unk_token,

125 bos_token=bos_token,

126 eos_token=eos_token,

127 pad_token=pad_token,

128 **kwargs,

129 )

File /opt/anaconda3/lib/python3.11/site-packages/transformers/tokenization_utils_fast.py:117, in PreTrainedTokenizerFast.__init__(self, *args, **kwargs)

114 fast_tokenizer = copy.deepcopy(tokenizer_object)

115 elif fast_tokenizer_file is not None and not from_slow:

116 # We have a serialization from tokenizers which let us directly build the backend

--> 117 fast_tokenizer = TokenizerFast.from_file(fast_tokenizer_file)

118 elif slow_tokenizer:

119 # We need to convert a slow tokenizer to build the backend

120 fast_tokenizer = convert_slow_tokenizer(slow_tokenizer)

Exception: expected value at line 1 column 1

This error seems to be related to Flash Attention 2. However, jina-embeddings-v3 works normally. The same problem also occurs when I load the model with transformers instead of sentence_transformers. Looking forward to your reply.

Hi @dophys, my guess is that you haven't pulled the tokenizer.json file from Git LFS. When the tokenizer tries to load it, it throws the `Exception: expected value at line 1 column 1` error. Can you try running `git lfs pull --include="tokenizer.json"`? I think this should fix your issue.

The issue was that tokenizer.json wasn’t automatically pulled when cloning the model. I just fixed this, so next time you clone, you won’t have to pull it yourself.
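For anyone who wants to check this on a local clone: a file that Git LFS has not fetched is a small text pointer starting with `version https://git-lfs.github.com/spec/v1`. That is not JSON, which would explain why the tokenizer loader fails on the very first character. Below is a minimal sketch that scans a model directory for such pointer files. The helper names `is_lfs_pointer` and `find_lfs_pointers` are made up for illustration and are not part of any library.

```python
from pathlib import Path

# Every Git LFS pointer file begins with this line (Git LFS pointer spec, v1).
LFS_POINTER_PREFIX = b"version https://git-lfs.github.com/spec/v1"


def is_lfs_pointer(path):
    """Return True if the file looks like an unfetched Git LFS pointer."""
    with open(path, "rb") as fh:
        head = fh.read(len(LFS_POINTER_PREFIX))
    return head == LFS_POINTER_PREFIX


def find_lfs_pointers(model_dir):
    """List the names of files directly inside model_dir that are still LFS pointers."""
    return sorted(
        p.name
        for p in Path(model_dir).iterdir()
        if p.is_file() and is_lfs_pointer(p)
    )
```

Calling `find_lfs_pointers("/data/models/jina-embeddings-v4")` on the clone from the first post should return an empty list once everything has been pulled. If `tokenizer.json` shows up in the result, it still needs to be fetched.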

Thank you, it works. However, there is a new problem: "You are attempting to use Flash Attention 2.0 with a model not initialized on GPU. Make sure to move the model to GPU after initializing it on CPU with `model.to('cuda')`." It seems that the model is not being placed on the GPU, and calling `model.to("cuda")` does not help.

from sentence_transformers import SentenceTransformer

# 1. Load a pretrained Sentence Transformer model

model = SentenceTransformer("/data/models/jina-embeddings-v4", trust_remote_code=True)

model.to("cuda")

# The sentences to encode

sentences = [

"The weather is lovely today.",

"It's so sunny outside!",

"He drove to the stadium.",

]

# 2. Calculate embeddings by calling model.encode()

embeddings = model.encode(sentences, task="retrieval", prompt_name="query")

print(embeddings.shape)

# [3, 384]

# 3. Calculate the embedding similarities

similarities = model.similarity(embeddings, embeddings)

print(similarities)

# tensor([[1.0000, 0.6660, 0.1046],

# [0.6660, 1.0000, 0.1411],

# [0.1046, 0.1411, 1.0000]])

Wait, I'm not sure about this problem. Please ignore the above for now.

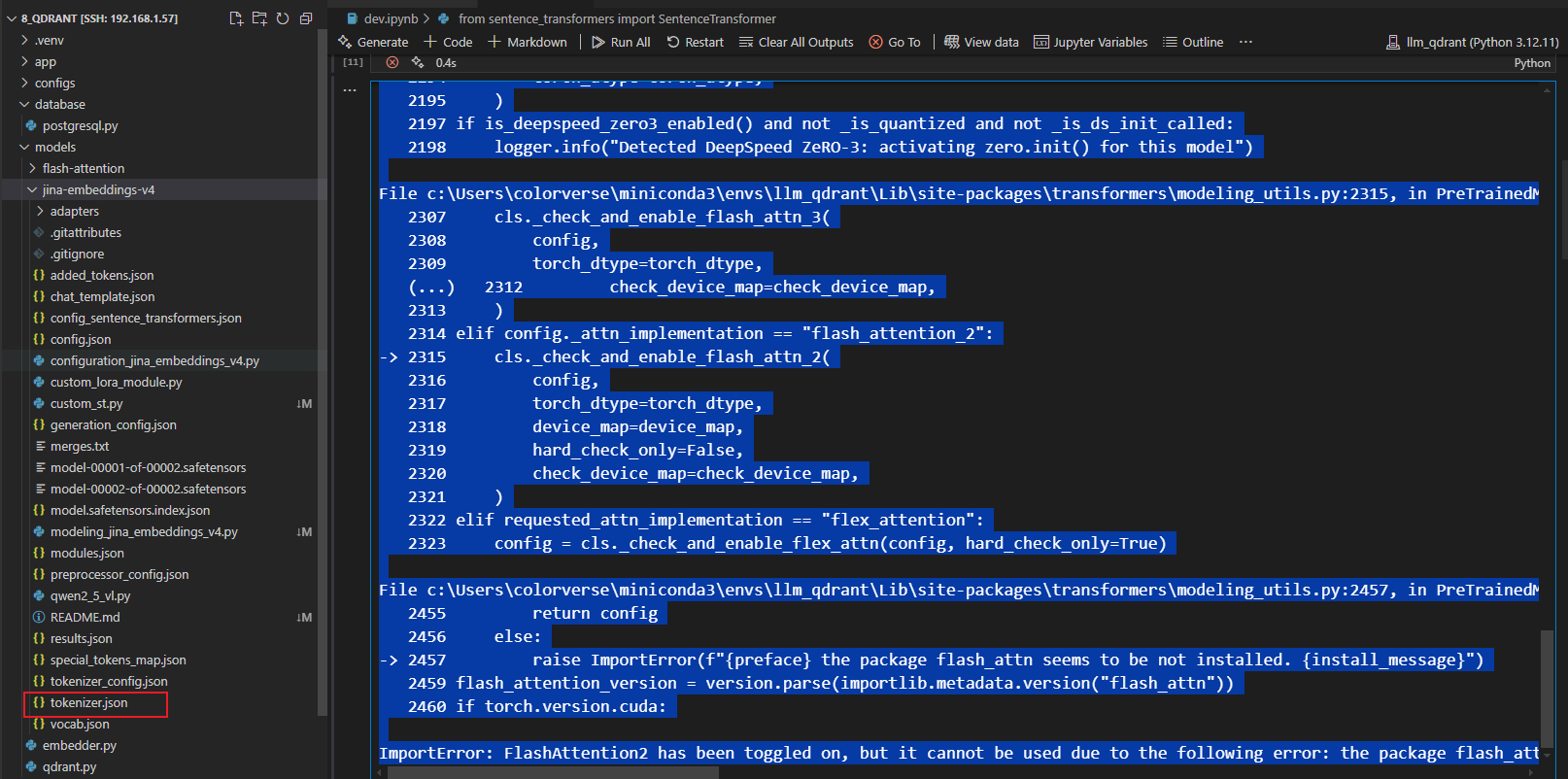

I seem to be encountering a GPU-related error. Flash Attention 2 is being turned on automatically, even though I haven't installed it.

ImportError Traceback (most recent call last)

Cell In[11], line 4

1 from sentence_transformers import SentenceTransformer

3 # 1. Load a pretrained Sentence Transformer model

----> 4 model = SentenceTransformer(str(model_embedding_path), trust_remote_code=True)

6 # The sentences to encode

7 sentences = [

8 "The weather is lovely today.",

9 "It's so sunny outside!",

10 "He drove to the stadium.",

11 ]

File c:\Users\colorverse\miniconda3\envs\llm_qdrant\Lib\site-packages\sentence_transformers\SentenceTransformer.py:309, in SentenceTransformer.__init__(self, model_name_or_path, modules, device, prompts, default_prompt_name, similarity_fn_name, cache_folder, trust_remote_code, revision, local_files_only, token, use_auth_token, truncate_dim, model_kwargs, tokenizer_kwargs, config_kwargs, model_card_data, backend)

300 model_name_or_path = __MODEL_HUB_ORGANIZATION__ + "/" + model_name_or_path

302 if is_sentence_transformer_model(

303 model_name_or_path,

304 token,

(...) 307 local_files_only=local_files_only,

308 ):

--> 309 modules, self.module_kwargs = self._load_sbert_model(

310 model_name_or_path,

311 token=token,

312 cache_folder=cache_folder,

313 revision=revision,

314 trust_remote_code=trust_remote_code,

315 local_files_only=local_files_only,

316 model_kwargs=model_kwargs,

317 tokenizer_kwargs=tokenizer_kwargs,

318 config_kwargs=config_kwargs,

319 )

320 else:

321 modules = self._load_auto_model(

322 model_name_or_path,

323 token=token,

(...) 330 config_kwargs=config_kwargs,

331 )

File c:\Users\colorverse\miniconda3\envs\llm_qdrant\Lib\site-packages\sentence_transformers\SentenceTransformer.py:1808, in SentenceTransformer._load_sbert_model(self, model_name_or_path, token, cache_folder, revision, trust_remote_code, local_files_only, model_kwargs, tokenizer_kwargs, config_kwargs)

1805 # Try to initialize the module with a lot of kwargs, but only if the module supports them

1806 # Otherwise we fall back to the load method

1807 try:

-> 1808 module = module_class(model_name_or_path, cache_dir=cache_folder, backend=self.backend, **kwargs)

1809 except TypeError:

1810 module = module_class.load(model_name_or_path)

File ~\.cache\huggingface\modules\transformers_modules\jina-embeddings-v4\custom_st.py:45, in Transformer.__init__(self, model_name_or_path, max_seq_length, config_args, model_args, tokenizer_args, cache_dir, backend, **kwargs)

40 if self.default_task and self.default_task not in self.config.task_names:

41 raise ValueError(

42 f"Invalid task: {self.default_task}. Must be one of {self.config.task_names}."

43 )

---> 45 self.model = AutoModel.from_pretrained(

46 model_name_or_path, config=self.config, cache_dir=cache_dir, **model_kwargs

47 )

48 self.processor = AutoProcessor.from_pretrained(

49 model_name_or_path,

50 cache_dir=cache_dir,

51 use_fast=True,

52 **tokenizer_kwargs,

53 )

54 self.max_seq_length = max_seq_length or 8192

File c:\Users\colorverse\miniconda3\envs\llm_qdrant\Lib\site-packages\transformers\models\auto\auto_factory.py:593, in _BaseAutoModelClass.from_pretrained(cls, pretrained_model_name_or_path, *model_args, **kwargs)

591 model_class.register_for_auto_class(auto_class=cls)

592 model_class = add_generation_mixin_to_remote_model(model_class)

--> 593 return model_class.from_pretrained(

594 pretrained_model_name_or_path, *model_args, config=config, **hub_kwargs, **kwargs

595 )

596 elif type(config) in cls._model_mapping.keys():

597 model_class = _get_model_class(config, cls._model_mapping)

File ~\.cache\huggingface\modules\transformers_modules\jina-embeddings-v4\modeling_jina_embeddings_v4.py:565, in JinaEmbeddingsV4Model.from_pretrained(cls, pretrained_model_name_or_path, *args, **kwargs)

562 if not is_flash_attn_2_available():

563 kwargs["attn_implementation"] = "sdpa"

--> 565 base_model = super().from_pretrained(

566 pretrained_model_name_or_path, *args, **kwargs

567 )

569 # Configure adapter directory

570 if os.path.isdir(base_model.name_or_path):

File c:\Users\colorverse\miniconda3\envs\llm_qdrant\Lib\site-packages\transformers\modeling_utils.py:311, in restore_default_torch_dtype.<locals>._wrapper(*args, **kwargs)

309 old_dtype = torch.get_default_dtype()

310 try:

--> 311 return func(*args, **kwargs)

312 finally:

313 torch.set_default_dtype(old_dtype)

File c:\Users\colorverse\miniconda3\envs\llm_qdrant\Lib\site-packages\transformers\modeling_utils.py:4760, in PreTrainedModel.from_pretrained(cls, pretrained_model_name_or_path, config, cache_dir, ignore_mismatched_sizes, force_download, local_files_only, token, revision, use_safetensors, weights_only, *model_args, **kwargs)

4752 config = cls._autoset_attn_implementation(

4753 config,

4754 torch_dtype=torch_dtype,

4755 device_map=device_map,

4756 )

4758 with ContextManagers(model_init_context):

4759 # Let's make sure we don't run the init function of buffer modules

-> 4760 model = cls(config, *model_args, **model_kwargs)

4762 # Make sure to tie the weights correctly

4763 model.tie_weights()

File ~\.cache\huggingface\modules\transformers_modules\jina-embeddings-v4\modeling_jina_embeddings_v4.py:142, in JinaEmbeddingsV4Model.__init__(self, config)

141 def __init__(self, config: JinaEmbeddingsV4Config):

--> 142 Qwen2_5_VLForConditionalGeneration.__init__(self, config)

143 self._init_projection_layer(config)

144 self.post_init()

File ~\.cache\huggingface\modules\transformers_modules\jina-embeddings-v4\qwen2_5_vl.py:2121, in Qwen2_5_VLForConditionalGeneration.__init__(self, config)

2119 def __init__(self, config):

2120 super().__init__(config)

-> 2121 self.model = Qwen2_5_VLModel(config)

2122 self.lm_head = nn.Linear(config.text_config.hidden_size, config.text_config.vocab_size, bias=False)

2124 self.post_init()

File ~\.cache\huggingface\modules\transformers_modules\jina-embeddings-v4\qwen2_5_vl.py:1709, in Qwen2_5_VLModel.__init__(self, config)

1707 super().__init__(config)

1708 self.visual = Qwen2_5_VisionTransformerPretrainedModel._from_config(config.vision_config)

-> 1709 self.language_model = Qwen2_5_VLTextModel._from_config(config.text_config)

1710 self.rope_deltas = None # cache rope_deltas here

1712 # Initialize weights and apply final processing

File c:\Users\colorverse\miniconda3\envs\llm_qdrant\Lib\site-packages\transformers\modeling_utils.py:311, in restore_default_torch_dtype.<locals>._wrapper(*args, **kwargs)

309 old_dtype = torch.get_default_dtype()

310 try:

--> 311 return func(*args, **kwargs)

312 finally:

313 torch.set_default_dtype(old_dtype)

File c:\Users\colorverse\miniconda3\envs\llm_qdrant\Lib\site-packages\transformers\modeling_utils.py:2191, in PreTrainedModel._from_config(cls, config, **kwargs)

2189 config._attn_implementation = kwargs.pop("attn_implementation", attn_implementation)

2190 if not getattr(config, "_attn_implementation_autoset", False):

-> 2191 config = cls._autoset_attn_implementation(

2192 config,

2193 check_device_map=False,

2194 torch_dtype=torch_dtype,

2195 )

2197 if is_deepspeed_zero3_enabled() and not _is_quantized and not _is_ds_init_called:

2198 logger.info("Detected DeepSpeed ZeRO-3: activating zero.init() for this model")

File c:\Users\colorverse\miniconda3\envs\llm_qdrant\Lib\site-packages\transformers\modeling_utils.py:2315, in PreTrainedModel._autoset_attn_implementation(cls, config, torch_dtype, device_map, check_device_map)

2307 cls._check_and_enable_flash_attn_3(

2308 config,

2309 torch_dtype=torch_dtype,

(...) 2312 check_device_map=check_device_map,

2313 )

2314 elif config._attn_implementation == "flash_attention_2":

-> 2315 cls._check_and_enable_flash_attn_2(

2316 config,

2317 torch_dtype=torch_dtype,

2318 device_map=device_map,

2319 hard_check_only=False,

2320 check_device_map=check_device_map,

2321 )

2322 elif requested_attn_implementation == "flex_attention":

2323 config = cls._check_and_enable_flex_attn(config, hard_check_only=True)

File c:\Users\colorverse\miniconda3\envs\llm_qdrant\Lib\site-packages\transformers\modeling_utils.py:2457, in PreTrainedModel._check_and_enable_flash_attn_2(cls, config, torch_dtype, device_map, check_device_map, hard_check_only)

2455 return config

2456 else:

-> 2457 raise ImportError(f"{preface} the package flash_attn seems to be not installed. {install_message}")

2459 flash_attention_version = version.parse(importlib.metadata.version("flash_attn"))

2460 if torch.version.cuda:

ImportError: FlashAttention2 has been toggled on, but it cannot be used due to the following error: the package flash_attn seems to be not installed. Please refer to the documentation of https://huggingface.co/docs/transformers/perf_infer_gpu_one#flashattention-2 to install Flash Attention 2.

I got the same error: ImportError: FlashAttention2 has been toggled on, but it cannot be used due to the following error: the package flash_attn seems to be not installed. Please refer to the documentation of https://huggingface.co/docs/transformers/perf_infer_gpu_one#flashattention-2 to install Flash Attention 2.
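Both Flash Attention messages in this thread appear to come from the same check in transformers. It sees `flash_attention_2` requested in the config, then looks for the `flash_attn` package and a GPU. A quick way to see which situation applies is to check those two things before loading the model. The following is a rough sketch, not a confirmed fix. `flash_attn_installed`, `cuda_available`, and `pick_attn_implementation` are hypothetical helper names. Whether this model's remote code honors an `attn_implementation` override for its text submodule is an assumption I have not verified.

```python
import importlib.util


def flash_attn_installed():
    """True if the flash_attn package can be found in this environment."""
    return importlib.util.find_spec("flash_attn") is not None


def cuda_available():
    """True if torch is installed and reports a usable CUDA device."""
    if importlib.util.find_spec("torch") is None:
        return False
    import torch

    return torch.cuda.is_available()


def pick_attn_implementation(has_flash_attn, has_cuda):
    """Use Flash Attention 2 only when both the package and a GPU are present."""
    if has_flash_attn and has_cuda:
        return "flash_attention_2"
    # Assumption: "sdpa" is the fallback, as in the model's own from_pretrained.
    return "sdpa"


if __name__ == "__main__":
    has_fa = flash_attn_installed()
    has_gpu = cuda_available()
    print("flash_attn installed:", has_fa)
    print("CUDA available:", has_gpu)
    print("suggested attn_implementation:", pick_attn_implementation(has_fa, has_gpu))
```

The suggested value could then be tried as `SentenceTransformer(path, trust_remote_code=True, device="cuda", model_kwargs={"attn_implementation": impl})`. Both `device` and `model_kwargs` appear in the constructor signature in the traceback above. The traceback also shows the text submodule selecting `flash_attention_2` from its own config, so this override may not be sufficient on its own.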