initial commit

- README.md +169 -0

- demo/.DS_Store +0 -0

- demo/GeorgiaTech_RGB.png +0 -0

- demo/comparison_mae.jpeg +0 -0

- demo/nerf-mae_architecture.jpg +0 -0

- demo/nerf-mae_teaser.jpeg +0 -0

- demo/nerf-mae_teaser.png +0 -0

- demo/tri-logo.png +0 -0

- front3d_obb_finetuned.pt +3 -0

- nerf_mae_pretrained.pt +3 -0

- scannet_obb_finetuned.pt +3 -0

README.md

CHANGED

|

@@ -1,3 +1,172 @@

|

| 1 |

---

|

| 2 |

license: cc-by-nc-4.0

|

| 3 |

---

|

| 1 |

+

<!-- # NeRF-MAE: Masked AutoEncoders for Self-Supervised 3D Representation Learning for Neural Radiance Fields -->

|

| 2 |

+

|

| 3 |

+

<div align="center">

|

| 4 |

+

<img src="demo/nerf-mae_teaser.png" width="85%">

|

| 5 |

+

<img src="demo/nerf-mae_teaser.jpeg" width="85%">

|

| 6 |

+

</div>

|

| 7 |

+

<!-- <p align="center">

|

| 8 |

+

<img src="demo/nerf-mae_teaser.jpeg" width="100%">

|

| 9 |

+

</p> -->

|

| 10 |

+

|

| 11 |

+

<br>

|

| 12 |

+

<div align="center">

|

| 13 |

+

|

| 14 |

+

[arXiv](https://arxiv.org/abs/2404.01300)

|

| 15 |

+

[Project Page](https://nerf-mae.github.io)

|

| 16 |

+

[PyTorch](https://pytorch.org/)

|

| 17 |

+

[Citation](https://github.com/zubair-irshad/NeRF-MAE?tab=readme-ov-file#citation)

|

| 19 |

+

[Video](https://youtu.be/D60hlhmeuJI?si=d4RfHAwBJgLJXdKj)

|

| 21 |

+

|

| 22 |

+

|

| 23 |

+

|

| 24 |

+

</div>

|

| 25 |

+

|

| 26 |

+

---

|

| 27 |

+

|

| 28 |

+

<a href="https://www.tri.global/" target="_blank">

|

| 29 |

+

<img align="right" src="demo/GeorgiaTech_RGB.png" width="18%"/>

|

| 30 |

+

</a>

|

| 31 |

+

|

| 32 |

+

<a href="https://www.tri.global/" target="_blank">

|

| 33 |

+

<img align="right" src="demo/tri-logo.png" width="17%"/>

|

| 34 |

+

</a>

|

| 35 |

+

|

| 36 |

+

### [Project Page](https://nerf-mae.github.io/) | [arXiv](https://arxiv.org/abs/2404.01300) | [PDF](https://arxiv.org/pdf/2404.01300.pdf)

|

| 37 |

+

|

| 38 |

+

|

| 39 |

+

|

| 40 |

+

**NeRF-MAE: Masked AutoEncoders for Self-Supervised 3D Representation Learning for Neural Radiance Fields**

|

| 41 |

+

|

| 42 |

+

<a href="https://zubairirshad.com"><strong>Muhammad Zubair Irshad</strong></a>

|

| 43 |

+

·

|

| 44 |

+

<a href="https://zakharos.github.io/"><strong>Sergey Zakharov</strong></a>

|

| 45 |

+

·

|

| 46 |

+

<a href="https://www.linkedin.com/in/vitorguizilini"><strong>Vitor Guizilini</strong></a>

|

| 47 |

+

·

|

| 48 |

+

<a href="https://adriengaidon.com/"><strong>Adrien Gaidon</strong></a>

|

| 49 |

+

·

|

| 50 |

+

<a href="https://faculty.cc.gatech.edu/~zk15/"><strong>Zsolt Kira</strong></a>

|

| 51 |

+

·

|

| 52 |

+

<a href="https://www.tri.global/about-us/dr-rares-ambrus"><strong>Rares Ambrus</strong></a>

|

| 53 |

+

<br> **European Conference on Computer Vision, ECCV 2024**<br>

|

| 54 |

+

|

| 55 |

+

<b> Toyota Research Institute | Georgia Institute of Technology</b>

|

| 56 |

+

|

| 57 |

+

## 💡 Highlights

|

| 58 |

+

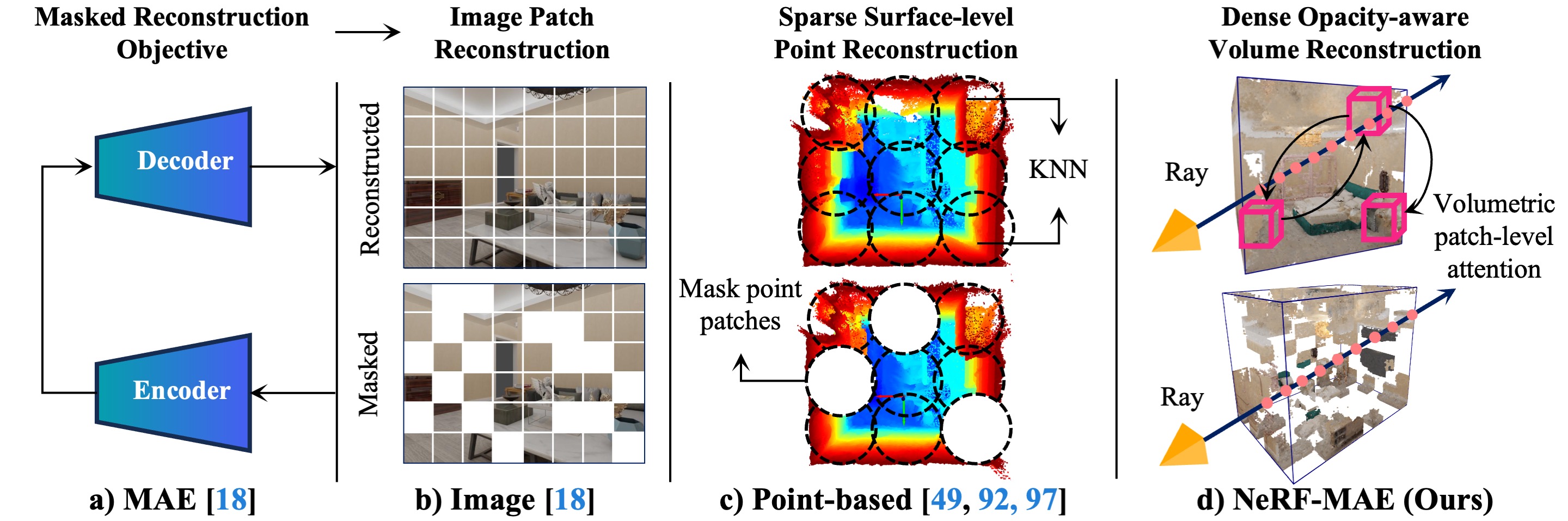

- **NeRF-MAE**: The first large-scale pretraining utilizing Neural Radiance Fields (NeRF) as an input modality. We pretrain a single Transformer model on thousands of NeRFs for 3D representation learning.

|

| 59 |

+

- **NeRF-MAE Dataset**: A large-scale NeRF pretraining and downstream task finetuning dataset.

|

| 60 |

+

|

| 61 |

+

## Citation

|

| 62 |

+

|

| 63 |

+

If you find this repository or our dataset useful, please star ⭐ this repository and consider citing 📝:

|

| 64 |

+

|

| 65 |

+

```

|

| 66 |

+

@inproceedings{irshad2024nerfmae,

|

| 67 |

+

title={NeRF-MAE: Masked AutoEncoders for Self-Supervised 3D Representation Learning for Neural Radiance Fields},

|

| 68 |

+

author={Muhammad Zubair Irshad and Sergey Zakharov and Vitor Guizilini and Adrien Gaidon and Zsolt Kira and Rares Ambrus},

|

| 69 |

+

booktitle={European Conference on Computer Vision (ECCV)},

|

| 70 |

+

year={2024}

|

| 71 |

+

}

|

| 72 |

+

```

|

| 73 |

+

|

| 74 |

+

### Contents

|

| 75 |

+

- [🌇 Environment](#-environment)

|

| 76 |

+

- [⛳ Model Usage and Checkpoints](#-model-usage-and-checkpoints)

|

| 77 |

+

- [🗂️ Dataset](#-dataset)

|

| 78 |

+

|

| 79 |

+

## 🌇 Environment

|

| 80 |

+

|

| 81 |

+

Create a Python 3.9 virtual environment and install the requirements:

|

| 82 |

+

|

| 83 |

+

```bash

|

| 84 |

+

cd /path/to/NeRF-MAE  # root of your NeRF-MAE clone

|

| 85 |

+

conda create -n nerf-mae python=3.9

|

| 86 |

+

conda activate nerf-mae

|

| 87 |

+

pip install --upgrade pip

|

| 88 |

+

pip install -r requirements.txt

|

| 89 |

+

pip install torch==1.12.1+cu113 torchvision==0.13.1+cu113 -f https://download.pytorch.org/whl/torch_stable.html

|

| 90 |

+

```

|

| 91 |

+

The code was built and tested on **CUDA 11.3**.
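After installation, a quick sanity check can confirm that the environment matches the versions named above. The following is a minimal sketch, not part of the NeRF-MAE codebase: the helper names `matches_expected` and `describe_torch_env` are hypothetical, and the expected versions are simply copied from the `pip install` command and the CUDA note in this README.

```python
import importlib.util

# Versions taken from the install instructions in this README.
EXPECTED_TORCH = "1.12.1"
EXPECTED_CUDA = "11.3"


def matches_expected(torch_version, cuda_version):
    """Return True if the versions match the ones this README was tested with."""
    # torch.__version__ looks like "1.12.1+cu113"; drop the local build tag.
    base_version = torch_version.split("+")[0]
    return base_version == EXPECTED_TORCH and cuda_version == EXPECTED_CUDA


def describe_torch_env():
    """Collect basic facts about the installed PyTorch build (hypothetical helper)."""
    if importlib.util.find_spec("torch") is None:
        return {"torch_installed": False}
    import torch

    return {
        "torch_installed": True,
        "torch_version": torch.__version__,
        "cuda_version": torch.version.cuda,  # None for CPU-only builds
        "cuda_available": torch.cuda.is_available(),
        "matches_readme": matches_expected(torch.__version__, torch.version.cuda),
    }


if __name__ == "__main__":
    print(describe_torch_env())
```

A mismatch does not necessarily mean the code will fail; it only signals that the setup differs from the tested configuration.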

|

| 92 |

+

|

| 93 |

+

To run downstream task finetuning, compile the CUDA extension as described in [NeRF-RPN](https://github.com/lyclyc52/NeRF_RPN):

|

| 94 |

+

|

| 95 |

+

```bash

|

| 96 |

+

cd /path/to/NeRF-MAE  # root of your NeRF-MAE clone

|

| 97 |

+

cd nerf_rpn/model/rotated_iou/cuda_op

|

| 98 |

+

python setup.py install

|

| 99 |

+

cd ../../../..

|

| 100 |

+

|

| 101 |

+

```

|

| 102 |

+

|

| 103 |

+

## ⛳ Model Usage and Checkpoints

|

| 104 |

+

|

| 105 |

+

NeRF-MAE is structured to provide easy access to pretrained NeRF-MAE models (and reproductions) for use in various downstream tasks. This is useful for extracting good visual features from NeRFs if you don't have the resources for large-scale pretraining. Our pretraining provides an easy-to-access embedding of any NeRF scene, which can be used for a variety of downstream tasks in a straightforward way.

|

| 106 |

+

|

| 107 |

+

We have released (1) pretrained and (2) finetuned checkpoints so you can start using our codebase out of the box. Below is a sample usage of our model in a few lines of commented code:

|

| 108 |

+

|

| 109 |

+

```python

|

| 110 |

+

import torch

|

| 111 |

+

# Load data from the specified folder and filename with the given resolution.

|

| 112 |

+

res, rgbsigma = load_data(folder_name, filename, resolution=args.resolution)

|

| 113 |

+

|

| 114 |

+

# Build the model using provided arguments.

|

| 115 |

+

model = build_model(args)

|

| 116 |

+

|

| 117 |

+

# Load checkpoint if provided.

|

| 118 |

+

if args.checkpoint:

|

| 119 |

+

    model.load_state_dict(torch.load(args.checkpoint, map_location="cpu")["state_dict"])

|

| 120 |

+

model.eval() # Set model to evaluation mode.

|

| 121 |

+

|

| 122 |

+

# Run inference to get the features for downstream usage.

|

| 123 |

+

with torch.no_grad():

|

| 124 |

+

    pred = model([rgbsigma], is_eval=True)[3] # Extract only predictions.

|

| 125 |

+

|

| 126 |

+

```

|

| 127 |

+

|

| 128 |

+

### 1. How to plug these features into downstream 3D bounding box detection from NeRFs (i.e. plug-and-play with a [NeRF-RPN](https://github.com/lyclyc52/NeRF_RPN) OBB prediction head)

|

| 129 |

+

|

| 130 |

+

Please also see the section on [Finetuning](#-finetuning). Our released finetuned checkpoint achieves state-of-the-art results on 3D object detection in NeRFs. To evaluate our finetuned checkpoint on the dataset provided by NeRF-RPN, update the checkpoint path (```--checkpoint```) and ```DATA_ROOT``` for either ```Front3D``` or ```Scannet```, depending on which dataset you evaluate, and then run the script below:

|

| 131 |

+

|

| 132 |

+

```bash

|

| 133 |

+

bash test_fcos_pretrained.sh

|

| 134 |

+

```

|

| 135 |

+

|

| 136 |

+

Also see the corresponding run file, ```run_fcos_pretrained.py```, and our model adaptation, ```SwinTransformer_FPN_Pretrained_Skip```. This is a minimal adaptation that plugs our weights into a NeRF-RPN architecture and achieves a significant boost in performance.
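Because the checkpoint and the data root must be chosen together, a small helper can keep the two in sync before the evaluation script is edited. This is a sketch under stated assumptions: the function `resolve_eval_paths` is hypothetical and not part of the repository, and pairing each dataset with the checkpoint file of the same name (`front3d_obb_finetuned.pt`, `scannet_obb_finetuned.pt`, both listed in this commit) is inferred from the filenames only.

```python
from pathlib import Path

# Checkpoint files listed in this commit. Pairing them with datasets by name
# is an assumption made for illustration.
FINETUNED_CHECKPOINTS = {
    "front3d": "front3d_obb_finetuned.pt",
    "scannet": "scannet_obb_finetuned.pt",
}


def resolve_eval_paths(dataset, checkpoint_dir, data_root):
    """Return the checkpoint path and data root for one evaluation dataset."""
    key = dataset.lower()
    if key not in FINETUNED_CHECKPOINTS:
        known = ", ".join(sorted(FINETUNED_CHECKPOINTS))
        raise ValueError(f"unknown dataset {dataset!r}; expected one of: {known}")
    return {
        "checkpoint": str(Path(checkpoint_dir) / FINETUNED_CHECKPOINTS[key]),
        "data_root": str(Path(data_root)),
    }


if __name__ == "__main__":
    # Hypothetical local directories; replace them with your own.
    print(resolve_eval_paths("Front3D", "checkpoints", "datasets/finetune/front3d_rpn_data"))
```

The returned values are the ones to copy into ```--checkpoint``` and ```DATA_ROOT``` in the evaluation script.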

|

| 137 |

+

|

| 138 |

+

|

| 139 |

+

## 🗂️ Dataset

|

| 140 |

+

|

| 141 |

+

Download the preprocessed datasets from the links below:

|

| 142 |

+

|

| 143 |

+

- Pretraining dataset (comprising NeRF radiance and density grids). [Download link](https://s3.amazonaws.com/tri-ml-public.s3.amazonaws.com/github/nerfmae/NeRF-MAE_pretrain.tar.gz)

|

| 144 |

+

- Finetuning dataset (comprising NeRF radiance and density grids and bounding box/semantic labelling annotations). [3D Object Detection (Provided by NeRF-RPN)](https://drive.google.com/drive/folders/1q2wwLi6tSXu1hbEkMyfAKKdEEGQKT6pj), [3D Semantic Segmentation (Coming Soon)](), [Voxel-Super Resolution (Coming Soon)]()

|

| 145 |

+

|

| 146 |

+

|

| 147 |

+

Extract pretraining and finetuning dataset under ```NeRF-MAE/datasets```. The directory structure should look like this:

|

| 148 |

+

|

| 149 |

+

```

|

| 150 |

+

NeRF-MAE

|

| 151 |

+

├── pretrain

|

| 152 |

+

│   ├── features

|

| 153 |

+

│   └── nerfmae_split.npz

|

| 154 |

+

└── finetune

|

| 155 |

+

    └── front3d_rpn_data

|

| 156 |

+

        ├── features

|

| 157 |

+

        ├── aabb

|

| 158 |

+

        └── obb

|

| 159 |

+

```
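The layout above can be checked programmatically after extraction. The following is a minimal sketch, not part of the NeRF-MAE codebase: the helper `missing_dataset_paths` is hypothetical, and it assumes that `front3d_rpn_data` sits under `finetune` and contains `features`, `aabb` and `obb`.

```python
from pathlib import Path

# Relative paths read off the directory tree above. The nesting of
# features/aabb/obb under finetune/front3d_rpn_data is an assumption.
EXPECTED_PATHS = [
    "pretrain/features",
    "pretrain/nerfmae_split.npz",
    "finetune/front3d_rpn_data/features",
    "finetune/front3d_rpn_data/aabb",
    "finetune/front3d_rpn_data/obb",
]


def missing_dataset_paths(root):
    """Return the expected entries that do not exist under the dataset root."""
    root = Path(root)
    return [rel for rel in EXPECTED_PATHS if not (root / rel).exists()]


if __name__ == "__main__":
    # "NeRF-MAE/datasets" is the extraction directory named in this README.
    missing = missing_dataset_paths("NeRF-MAE/datasets")
    if missing:
        print("Missing entries:", ", ".join(missing))
    else:
        print("Dataset layout looks complete.")
```

An empty result only confirms that the files and folders exist; it does not validate their contents.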

|

| 160 |

+

|

| 161 |

+

|

| 162 |

+

Note: The above datasets are all you need to train and evaluate our method. Bonus: we will soon release our multi-view rendered posed RGB images from FRONT3D, HM3D and Hypersim, as well as Instant-NGP trained checkpoints (these comprise 1M+ images and 3k+ NeRF checkpoints).

|

| 163 |

+

|

| 164 |

+

Please note that our dataset was generated using the instructions from [NeRF-RPN](https://github.com/lyclyc52/NeRF_RPN) and [3D-CLR](https://vis-www.cs.umass.edu/3d-clr/). Please consider citing our work, NeRF-RPN and 3D-CLR if you find this dataset useful in your research.

|

| 165 |

+

|

| 166 |

+

Please also note that our dataset uses [Front3D](https://arxiv.org/abs/2011.09127), [Habitat-Matterport3D](https://arxiv.org/abs/2109.08238), [HyperSim](https://github.com/apple/ml-hypersim) and [ScanNet](https://www.scan-net.org/) as the base version of the dataset, i.e. we train a NeRF per scene and extract radiance and density grids as well as aligned NeRF-grid 3D annotations. Please read the terms of use for each dataset if you want to utilize its posed multi-view images.

|

| 167 |

+

|

| 168 |

+

### For more details, please check out our Paper, GitHub and Project Page!

|

| 169 |

+

|

| 170 |

---

|

| 171 |

license: cc-by-nc-4.0

|

| 172 |

---

|

demo/.DS_Store

ADDED

|

Binary file (6.15 kB).

|

demo/GeorgiaTech_RGB.png

ADDED

|

demo/comparison_mae.jpeg

ADDED

|

demo/nerf-mae_architecture.jpg

ADDED

|

demo/nerf-mae_teaser.jpeg

ADDED

|

demo/nerf-mae_teaser.png

ADDED

|

demo/tri-logo.png

ADDED

|

front3d_obb_finetuned.pt

ADDED

|

@@ -0,0 +1,3 @@

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:d7f0dee865f8629eee475cb87643c6af67fddefed8c9df9bf6d9414cd761e269

|

| 3 |

+

size 314063759
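The three lines above form a Git LFS pointer: `oid` is the SHA-256 digest of the real checkpoint file and `size` is its length in bytes. A downloaded checkpoint can be checked against these values. The following is a minimal sketch using only the Python standard library; the helper names `parse_lfs_pointer` and `verify_file` are hypothetical.

```python
import hashlib
import os

# Pointer text copied from this commit (front3d_obb_finetuned.pt).
POINTER = """version https://git-lfs.github.com/spec/v1
oid sha256:d7f0dee865f8629eee475cb87643c6af67fddefed8c9df9bf6d9414cd761e269
size 314063759"""


def parse_lfs_pointer(text):
    """Split a Git LFS pointer into its version, hash algorithm, digest and size."""
    fields = {}
    for line in text.strip().splitlines():
        key, _, value = line.partition(" ")
        fields[key] = value
    algo, _, digest = fields["oid"].partition(":")
    return {
        "version": fields["version"],
        "algo": algo,
        "digest": digest,
        "size": int(fields["size"]),
    }


def verify_file(path, pointer, chunk_size=1 << 20):
    """Return True if the file at path has the size and digest named in the pointer."""
    if os.path.getsize(path) != pointer["size"]:
        return False
    hasher = hashlib.new(pointer["algo"])
    with open(path, "rb") as handle:
        # Read in chunks so a checkpoint of several hundred MB is not loaded at once.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            hasher.update(chunk)
    return hasher.hexdigest() == pointer["digest"]


if __name__ == "__main__":
    pointer = parse_lfs_pointer(POINTER)
    path = "front3d_obb_finetuned.pt"
    if os.path.exists(path):
        print("checkpoint OK" if verify_file(path, pointer) else "checkpoint mismatch")
    else:
        print("checkpoint not found:", path)
```

If the file on disk is only a few hundred bytes, it is probably still the pointer itself; running `git lfs pull` in the clone fetches the real file.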

|

nerf_mae_pretrained.pt

ADDED

|

@@ -0,0 +1,3 @@

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:713abb59691adbcc16e805cf6e42d8360e79113f0fd82ed8a8514350a7b60e05

|

| 3 |

+

size 305663555

|

scannet_obb_finetuned.pt

ADDED

|

@@ -0,0 +1,3 @@

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:bef943ef054ef0904e9fa5a0075a3f76be5274bf7dff11699b5a6f741dc987fa

|

| 3 |

+

size 314063759

|